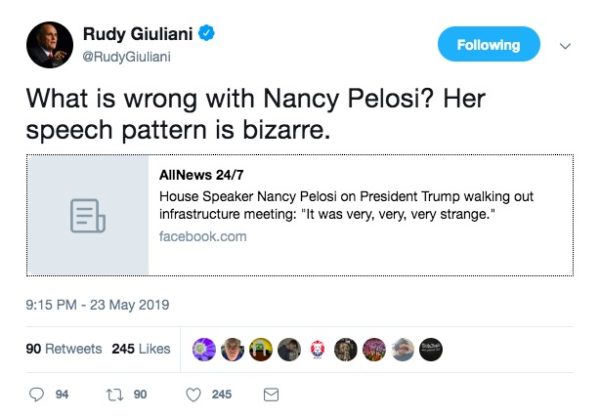

On May 23, 2019, Rudy Giuliani (then-President Trump’s personal attorney and advisor) tweeted a video that appeared to show House Speaker Nancy Pelosi speaking drunkenly at a Center for American Progress event. “What is wrong with Nancy Pelosi? Her speech pattern is bizarre,” Giuliani asked as thousands of users watched and shared the video. Shortly after, however, the tweet was labeled a violation of Twitter’s “manipulated media” policy, and Giuliani subsequently deleted it (Mervosh, 2019). While the origins of the video remain unknown, it was greatly altered from its original state – the footage was slowed to about 75% of its original speed, and the pitch was adjusted so that the slowed audio still sounded like her real voice (Harwell, 2019). This masterfully manipulated video of Pelosi is just one example of the rise of “cheapfakes” being published and spread online. Unlike “deepfakes,” which “rely on experimental machine learning” to “hybridize or generate human bodies and faces,” cheapfakes manipulate audio-visual media with “cheap, accessible software [such as Photoshop], or no software at all” (Paris & Donovan, 2019). Thus, nearly anyone who has a computer, the technological know-how, and the desire to do so can create a cheapfake. While such doctored media may warrant little concern when used for personal or comedic purposes, the use of cheapfakes in political communication has become a topic of controversy.

On one hand, some have argued that cheapfakes like the one of Pelosi are only the most recent evolution in “a long and rich history… of candidates lying about their opponents” (KCET TV). Indeed, from the earliest days of the United States, the founding fathers themselves regularly engaged in questionable strategies for political gain. For example, Thomas Jefferson referred to John Adams as a monarchist, and Adams accused Jefferson of being an atheist – two of the “worst thing[s] you could say about someone back then” (KCET TV). Though these claims were completely false, both men hoped to sway public opinion in their own favor by ruining the other’s reputation. Whether we are proud of it or not, techniques like spinning the truth and using misleading statements have always been part of the “blood sport” that is American politics (KCET TV). In this way, Giuliani’s behavior was not all that different from what has been happening for centuries – only his form of communication was more technologically advanced.

Furthermore, manipulation strategies in political campaigns have historically been protected under the First Amendment because “voters have a right to uncensored information from candidates, to evaluate information, and to make decisions for themselves” (Nott, 2020). This line of reasoning is, at its core, non-elitist, because it assumes that everyday citizens can be trusted to rationally think through the claims presented by politicians, conduct their own research, and come to the truth on their own without being guided by some authority. In other words, spin does not predetermine an audience’s reaction. On this view, if a politician lies throughout their campaign, the public will recognize it and vote accordingly.

On the other hand, some have argued that cheapfakes present grave challenges for establishing ethical political communication guidelines. Indeed, if a common reality is necessary for democracy to work, then cheapfakes potentially pose a serious threat to the overall system – especially when shared by highly influential figures. While it may be true that Americans have always had to sift through the messages of politicians and judge their accuracy for themselves, this task has become significantly more complicated since the digital revolution. Not only are cheapfakes, though made with simple technology, designed to be convincing, but the sheer amount of information constantly available can overwhelm citizens to the point that they are less critical of media than they might otherwise be. Kamarck (2019) also points out that “even if a lie is too outrageous for most people to believe, in a tight race, only a very small fraction of the electorate needs to believe it.” Thus, manipulated media has a real chance of undermining election integrity.

Moreover, unlike traditional political slander, new manipulated media like cheapfakes may harm American democracy by shifting the power dynamics between the sender (a political candidate) and the receiver (citizens) (Paris & Donovan, 2019). Historically, lying in a political advertisement has always been legal, but older media like broadcast radio and television have had to comply with certain Federal Communications Commission regulations because they occupy limited airwave space (Nott, 2020). Unlike private publishers, who can be held legally liable for libel and defamation, social media companies, as internet service providers, bear very little liability for the political ads they run (Nott, 2020). Especially in an era when more and more people get their news solely from social media, if no authority is regulating online content and the public is not checking other sources, politicians with especially dedicated social media followings may become demagogues who shape their followers’ very view of reality. The old problem of distinguishing a lie from persuasive spin also arises with cheapfakes: aren’t many video editing techniques calculated to sell the point of the clip’s producer? If one wanted to get rid of cheapfakes, how could one do so without also banning persuasive spin and potentially misleading but effective editing and filming techniques?

Cheapfakes are unlikely to disappear any time soon and, in fact, will only become more difficult to detect as AV manipulation techniques continue to advance. Furthermore, widespread public awareness of the technology may result in unintended consequences, such as “an excessively skeptical environment… [where] liars [can] deny responsibility for true events” by blaming cheapfakes (Chesney, Citron, and Jurecic, 2019). While it may sound far-fetched, there is already evidence that supports such a possibility, including former President Trump’s suggestion that a video of him making disparaging comments about women “was not authentic,” despite his having previously admitted to and apologized for the conduct (Martin, Haberman, & Burns, 2017). In the end, it seems that as cheapfakes become more sophisticated, the boundary between the online and the physical world blurs a bit more. Are cheapfakes simply a new, albeit unconventional, advertising tactic in line with already-established methods of political campaigning? Or are they dangerous manipulations that hold the power to bring “serious harm to our democracy” (Paris & Donovan, 2019)? Perhaps only time will tell.

Discussion Questions:

- What ethical tensions does manipulated political media place on voters? What about on democracy as a whole?

- Are cheapfakes deeply harmful for American democracy or are they simply a new form of dirty campaigning tricks that have always existed?

- What regulations, if any, should govern what politicians post about each other online? Who should set these guidelines – the government or private platforms?

- Is it possible to persuade someone to vote a certain way without manipulating them? How would you explain the difference between persuasion and manipulation?

Further Information:

Chesney, R., Citron, D., & Jurecic, Q. (2019, May 29). “About That Pelosi Video: What to Do About ‘Cheapfakes’ in 2020.” Lawfare. Available at: https://www.lawfareblog.com/about-pelosi-video-what-do-about-cheapfakes-2020

Harwell, D. (2019, May 24). “Faked Pelosi Videos, Slowed to Make her Appear Drunk, Spread Across Social Media.” The Washington Post. Available at: https://www.washingtonpost.com/technology/2019/05/23/faked-pelosi-videos-slowed-make-her-appear-drunk-spread-across-social-media/

Kamarck, E. (2019, July 11). “A Short History of Campaign Dirty Tricks before Twitter and Facebook.” Brookings. Available at: https://www.brookings.edu/blog/fixgov/2019/07/11/a-short-history-of-campaign-dirty-tricks-before-twitter-and-facebook/

KCET TV. “SoCal Connected Documentary: The Long and Dirty History of Political Ad Campaigns.” KCET TV. Available at: https://www.kcet.org/shows/socal-connected/clip/the-long-and-dirty-history-of-political-ad-campaigns

Martin, J., Haberman, M., & Burns, A. (2017, November 25). “Why Trump Stands by Roy Moore, even as it Fractures His Party.” The New York Times. Available at: https://www.nytimes.com/2017/11/25/us/politics/trump-roy-moore-mcconnell-alabama-senate.html?module=inline

Mervosh, S. (2019, May 24). “Distorted Videos of Nancy Pelosi Spread on Facebook and Twitter, Helped by Trump.” The New York Times. Available at: https://www.nytimes.com/2019/05/24/us/politics/pelosi-doctored-video.html

Nott, L. (2020, July 26). “Political Advertising on Social Media Platforms.” American Bar Association. Available at: https://www.americanbar.org/groups/crsj/publications/human_rights_magazine_home/voting-in-2020/political-advertising-on-social-media-platforms/

Paris, B. & Donovan, J. (2019, September 18). “Deepfakes and Cheap Fakes: The Manipulation of Audio and Visual Evidence.” Data & Society. Available at: https://datasociety.net/library/deepfakes-and-cheap-fakes/

Spicer, R. (2020, March 4). Media Literacy and a Typology of Political Deceptions. In W. G. Christ & S. De Abreu (Eds.), Media Literacy in a Disruptive Media Environment. (1st ed., Chapter 9). Routledge. Available at: https://www.taylorfrancis.com/books/e/9780367814762/chapters/10.4324/9780367814762-12

Authors:

Chloe Young, Kat Williams, & Scott R. Stroud, Ph.D.

Media Ethics Initiative

Center for Media Engagement

University of Texas at Austin

October 19, 2021

Image: Twitter screen capture

This case was supported by funding from the John S. and James L. Knight Foundation. These cases can be used in unmodified PDF form in classroom or educational settings. For use in publications such as textbooks, readers, and other works, please contact the Center for Media Engagement.

Ethics Case Study © 2021 by Center for Media Engagement is licensed under CC BY-NC-SA 4.0