Freedom of speech is arguably the most valued right granted by the American Constitution, but how should it be limited when speech potentially affects the health of communities and individuals? This controversy has recently reached social media with the growing number of “anti-vax” groups, or communities of parents concerned about the supposed dangers of vaccinating their children. As the online presence of anti-vaccine messages continues to increase, potentially threatening the health of children and communities, calls to limit the reach of such messages have grown louder. Should private social media companies censor or “deplatform” communication that seems wrong, unhelpful, or potentially harmful?

Many are worried about anti-vax messages and content because they risk undoing the many gains of vaccination programs. With this year’s surge in measles cases, after the disease was declared eliminated in the United States in 2000 (Centers for Disease Control and Prevention, 2018), the need for widespread vaccination seems more evident than ever. It is entirely possible for diseases we thought defeated to return if vaccination rates decline, and to return with a strength we would not be equipped to handle without large-scale immunity. Anti-vax groups typically spread messages about the supposed dangers of immunization and thus contribute to such risks by convincing many people not to vaccinate themselves or their children. To make matters worse, those who choose not to be vaccinated risk harming not only themselves but also those around them. Declining vaccination rates weaken what is called “herd immunity,” an epidemiological term for a population’s resistance to the spread of a disease when enough of its members are protected against it; this resistance also shields those who cannot be vaccinated, such as people with autoimmune diseases (Young, 2018).

The need for herd immunity, together with concerns about the lack of scientific support for anti-vax messages, has prompted many social media outlets to restrict anti-vax content. Pinterest, an online hotspot for sharing creative inspiration, has removed search results for the keyword “vaccine” because of an influx of anti-vax articles (Thompson, 2019). YouTube has pulled advertisements from, and stopped recommending, videos that promote anti-vax parenting (Sands, 2019). Amazon has removed anti-vax documentaries and ads from Prime Video (Spangler, 2019), and Facebook is working to lower the ranking of anti-vax search results and to remove related groups from those it recommends to users (Cohen & Bonifield, 2019). These actions appear justified to many, especially since the World Health Organization recently named vaccine hesitancy one of the top ten threats to global health in 2019. Many believe that censoring or “deplatforming” is the safest way to protect the public, since removing such materials keeps their messages from influencing individuals’ decisions.

Some might worry that these efforts to suppress the posting and spread of anti-vax content go too far. Shouldn’t people have control over their own decisions, a skeptic might ask? This includes the decision to publish, view, and internalize anti-vax information. Social media is a crossroads for opinions on every subject, many of which seem incoherent or harmful to some segments of the population, so prohibiting one side of the vaccine debate might seem unproductive. There is also the question of how to identify “anti-vaxxer” content in the first place. YouTube describes anti-vax videos as content that violates the platform’s guidelines against “dangerous and harmful” content. Yet the definition of dangerous and harmful can vary, and it is not clear that espousing an unscientific position is immediately dangerous. While some anti-vax materials may present false scientific information, others may simply express a point of view or skepticism. Additionally, material explaining a religion’s or ideology’s reasons for avoiding immunization could be educational for followers and outsiders alike, at least by informing them of why certain groups do not support vaccination efforts. Social media efforts to censor anti-vax content quickly begin to look like efforts to sort religious or political views by their alleged consequences. This relates to an abiding concern about censoring or stopping speech that some find objectionable or harmful: who judges these facts, and what errors are they prone to make?

As Marko Mavrovic of the Prindle Post warns, “Once you no longer value free speech, it becomes much easier to justify eliminating speech that you simply disagree with or believe should not exist” (Mavrovic, 2018). Beyond these worries lie concerns about unintended consequences. By removing or obscuring anti-vax content, social media companies might only give anti-vaxxers more “evidence” that powerful interests are trying to silence their messages about vaccines: “Demonetizing videos is likely to only affirm anti-vaxxer beliefs of being persecuted, making them more difficult to reach” (Sands, 2019). This could diminish any hope of changing anti-vaxxers’ minds. Moreover, attempts to censor anti-vax content have so far been less fruitful than predicted, calling into question whether these efforts are worth the cost. Analyzing the effects of these suppression efforts, CNN recently reported that “misinformation about vaccines continues to thrive on Facebook and Instagram weeks after the companies vowed to reduce its distribution on their platforms” (Darcy, 2019).

Scientists and experts largely agree that vaccines help far more people than they risk harming, and that ensuring only accurate information about their effectiveness circulates helps create a healthy society. But how do we proceed down the road of deplatforming, limiting, or banning a certain sort of content without censoring content that is more reasonable or nuanced, stifling unpopular opinions that might turn out to be right or partly correct, or provoking further backlash from those censored?

Discussion Questions:

- What are the central values in conflict over the decision to deplatform or suppress anti-vaccination content on social media?

- Is this primarily a legal or ethical controversy? Explain your reasoning.

- Do you agree with attempts to deplatform certain speakers and messages from popular social media outlets? If not, would you suggest other courses of action to combat anti-vaxxer content?

- How might social media platforms deal with speech that seems to threaten public health while still valuing free speech?

Further Information:

Centers for Disease Control and Prevention. (2018, February 5). “Measles History.” Centers for Disease Control and Prevention. Available at: www.cdc.gov/measles/about/history.html.

Cohen, E., and Bonifield, J. (2019, February 26). “Facebook to Get Tougher on Anti-Vaxers.” CNN. Available at: www.cnn.com/2019/02/25/health/facebook-anti-vaccine-content/index.html.

Darcy, O. (2019, March 21). “Vaccine misinformation flourishes on Facebook and Instagram weeks after promised crackdown.” CNN. Available at: www.cnn.com/2019/03/21/tech/vaccine-misinformation-facebook-instagram/index.html.

Mavrovic, M. (2018, September 18). “The Dangers and Ethics of Social Media Censorship.” The Prindle Post. Available at: www.prindlepost.org/2018/09/the-dangers-and-ethics-of-social-media-censorship/.

Sands, M. (2019, February 25). “Is YouTube Right to Demonetize Anti-Vax Channels?” Forbes Magazine. Available at: www.forbes.com/sites/masonsands/2019/02/25/is-youtube-right-to-demonetize-anti-vax-channels/#22f30f0014ce.

Spangler, T. (2019, March 2). “Amazon Pulls Anti-Vaccination Documentaries from Prime Video after Congressman’s Inquiry to Jeff Bezos.” Variety. Available at: www.variety.com/2019/digital/news/amazon-pulls-anti-vaccination-documentaries-prime-video-1203153487/.

Thompson, H. (2019, March 8). “Pinterest’s Block on Anti-Vaccination Content.” The Prindle Post. Available at: www.prindlepost.org/2019/03/pinterest-block-anti-vaccination-content/.

World Health Organization. (2013, February 19). “Six Common Misconceptions about Immunization.” World Health Organization. Available at: www.who.int/vaccine_safety/initiative/detection/immunization_misconceptions/en/index2.html.

Young, Z. (2018, November 28). “How Anti-Vax Went Viral.” Politico. Available at: www.politico.eu/article/how-anti-vax-went-viral/.

Authors:

Page Trotter & Scott R. Stroud, Ph.D.

Media Ethics Initiative

Center for Media Engagement

University of Texas at Austin

April 9, 2019

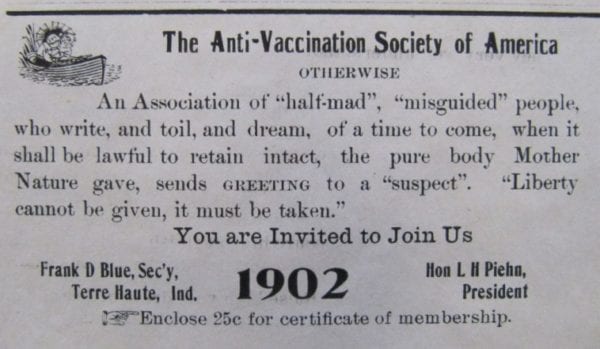

Image: Anti-vaccination advertisement from U.S. Newspaper, 1902

This case study can be used in unmodified PDF form for classroom or educational settings. For use in publications such as textbooks, readers, and other works, please contact the Center for Media Engagement.

Ethics Case Study © 2019 by Center for Media Engagement is licensed under CC BY-NC-SA 4.0