In 2016, the United Kingdom held a referendum on whether to remain in the European Union (EU). Fifty-two percent of voters chose to leave, a move popularly known as “Brexit.” The driving force behind the effort was the political campaign group Leave.EU. Since the vote, the campaign has been denounced for using psychographic data (information about characteristics and traits such as beliefs, values, and preferences) to target voters (van Hooijdonk, 2018). While the legally controversial element of this strategy had more to do with campaign funding laws than data privacy, discussions quickly arose about the ethics of using psychographic data in political campaigns.

Such campaign methods are not new. Barack Obama’s 2012 U.S. presidential campaign collected digital data through Facebook using the application “Targeted Sharing,” which allowed it to access the friend lists of users who had agreed to the application’s terms (“How Does,” 2018). Facebook has since changed its terms of service, so this method of data collection is no longer allowed. Yet campaigns have found novel ways to use information collected online to design personalized advertisements aimed at swaying undecided voters. They do this by hiring data analytics firms to “scrape” data (extracting the personal information of confirmed or potential voters from social media platforms) and then to create highly personalized, “microtargeted” advertisements. The analytics firm AggregateIQ used these methods in several pro-Brexit campaigns in the UK, much as Cambridge Analytica did for both Donald Trump’s and Ted Cruz’s 2016 presidential campaigns in the U.S. (Contee & Lemieux, 2020).

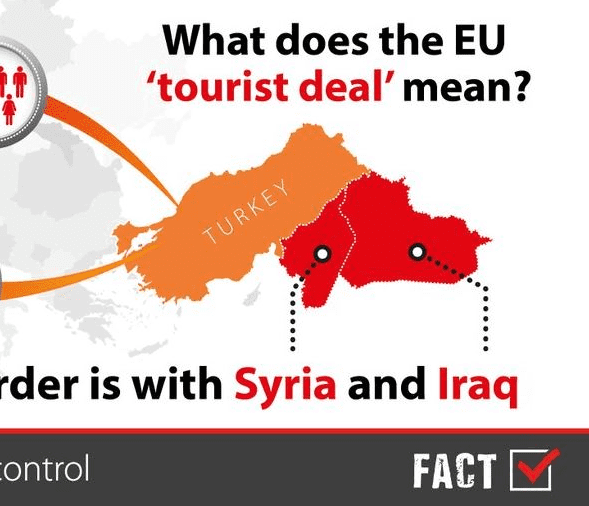

However, the use of psychographic data in campaigns, especially when such data are collected without the active consent of the user, poses important ethical questions: Do data analytics companies and political campaigns have an ethical responsibility to voters? In this fast-changing digital campaign space, where should political communicators draw the line between ethical and unethical use of voter data? To begin exploring these questions, consider how the Leave.EU campaign used personalized and micro-targeted advertisements to mobilize voters in the UK using the two ads displayed below concerning Turkey’s possible addition to the European Union:

Negotiations for Turkey’s EU membership had been under way for more than 10 years when the ads were designed, but by 2016 talks had stalled. By using personal data to identify voters who were likely to be wary of increased immigration, Leave.EU was able to design ads playing on voters’ fear of a future that, at the time, did not seem all that likely. By presenting what was only a possibility as if it were a reality (that the UK would effectively share a border with Syria and Iraq), the campaign sought to sway a crucial group of voters. Was this unethical? Not all microtargeting pushes false or misleading information, but in some cases it does. Yet isn’t the purpose of persuasive messaging to bring relevant and useful information to an audience? A microtargeted advertisement simply uses personal data to frame a political issue in a way that is likely to catch someone’s attention.

The key ethical issues to consider in these situations concern the content of a microtargeted advertisement, whether there is a line between being manipulative and being finely attuned to what media consumers want, and what level of consent a media user has given throughout this process. Using personal data can help political communicators design very effective advertisements. After collecting psychographic data and identifying what types of messages a media consumer may respond to, a political communicator can design content likely to confirm or challenge the consumer’s viewpoint. The extent to which a communicator believes that their advertisement can manipulate or control a media consumer might influence where they draw an ethical line, especially given that there is no consistent or clear data about how effective (or how manipulative) microtargeting strategies are.

Beyond considering the content of a political advertisement, communicators must take into account what consent media consumers have given to the analytics firms that scrape their data. Many data analytics firms use social media platforms to scrape psychographic data. If users of these platforms willingly publicize personal information and legally consent to this information being used (as many platforms require users to do), is there any ethical dilemma in using such data? Are there specific properties of scraping data on digital platforms that pose ethical questions that are less relevant in a non-digital setting? For example, a researcher might stand in a grocery store and record simple demographic information and the shopping habits of store patrons for an hour, and then make inferences or predictions about what specific patrons may be interested in buying. Is this necessarily unethical? Does it differ from how researchers make predictions using data collected online?

On a broader scale, the lack of informed consent from media consumers to data-harvesting firms might pose a threat to voters and to the integrity of democratic elections. While microtargeting might help voters become aware of candidates and issues they may not have come across before, many voters are unaware that their data are being harvested and used to design highly personalized advertisements. With close margins in many recent elections (such as the Brexit referendum in the UK and the 2016 U.S. presidential election), a shift of a few hundred thousand votes out of millions cast can decide the outcome.

Although it is unclear how effective microtargeting is compared to traditional TV and print advertisements, it is unique in that it targets voters individually. TV and print advertisements can only speak to a general audience, perhaps narrowed down by a few common characteristics or beliefs. Even direct mail, which candidates can target more precisely than broadcast or print ads, delivers the same content to hundreds or thousands of people. When voters whose data have been scraped are not aware of how and why they are being microtargeted, we must ask whether the method is fair. Investigative journalist Carole Cadwalladr warns that the use of psychographic data in microtargeted ads threatens the prospect of ever having a fair democratic election again, because highly targeted advertisements shown to only a small group of voters bypass and undermine “the shared political community essential for democracy” (Cadwalladr, 2017). In essence, campaigns can be aimed at individuals rather than communities. Democracy relies on communities with shared values coming together to address shared problems, and microtargeting risks undermining that sense of community. If microtargeting can filter the information voters have access to, it may be able to shape their beliefs.

Imagine that you are watching a sports game on TV with a group of friends. If a political or campaign advertisement is aired during the commercial break, you and your friends may engage in a discussion about that candidate or issue. You also might not—but the point is that you have all received the same information and can judge the contents and presentation of the message together or on your own time. However, if an advertisement is shown on Facebook to individuals who have no relation to each other and likely will never speak to each other about politics, they may end up with drastically different understandings of what an issue is about—or even different understandings of what the facts of the situation are.

Cadwalladr echoes a sentiment that political philosophers have discussed for centuries: in order for a democratic political system to function, voters must believe that they can engage in the democratic process on the same terms. This includes believing that, broadly speaking, they have access to the same information about political candidates and issues, that an election is not being rigged or meddled with, and that each of them has an equal chance to express their political beliefs. When voters receive drastically different information about candidates and issues, the democratic process may no longer meet these crucial prerequisite conditions (Contee & Lemieux, 2020). Political bubbles can shrink until each contains only the person at whom the ad is targeted, leaving no room for healthy political debate and discussion. Political communication has always relied on figuring out what an audience wants to hear and telling them you’re on their side (Aristotle, 2004, p. 194). Microtargeting and the nature of social media make such efforts more precise, and their methods more opaque, than ever before. This refinement and opacity may pose threats to democratic deliberation.

When asked about the ethics of using scraping and microtargeting strategies in political campaigns, AggregateIQ stated that it “does not undermine democracy by employing harmful, unethical techniques. It has never knowingly been involved in any illegal activity” (Devenport, 2018). Notice here the appeal to legality. While it is true that data privacy laws lag far behind the development of novel data harvesting technologies, ethical decision-making in digital political communication must extend beyond what is, at the moment, deemed legal or not.

In a world of big data, the information that consumers regularly and voluntarily generate is transforming the landscape of political communication. At every step of the process, political communicators must consider how far they are willing to go to change a voter’s mind. At what point does a political advertisement blatantly manipulate the information a voter receives? Were the voter’s data obtained with the voter’s consent? Is the use of psychographic information capitalizing on a culture of misinformation, sensationalism, and alarmism in a way that harms voters?

Discussion Questions:

- What are examples of situations in which it is ethical to use personal data available online to design and target political ads?

- How much do political communicators’ intentions matter in designing online campaigns?

- Who is responsible for protecting voters online: social media platforms, governments, or political communicators?

- What might voters’ data privacy (or lack thereof) mean for future campaigns? If there is insufficient legal protection of voters’ data in the future, what ethical responsibility will political communicators have?

- How does microtargeted advertising differ from intelligently designed conventional advertising (e.g., TV ads targeted for one geographic or demographic area)? Would your ethical criticisms of the former exclude well-researched instances of targeted regular advertising?

Further Information:

Aristotle. (2004). The Art of Rhetoric (H. C. Lawson-Tancred, Trans.). Penguin Classics.

Barnett, A. (2017, December 14). Democracy and the Machinations of Mind Control. The New York Review. https://www.nybooks.com/daily/2017/12/14/democracy-and-the-machinations-of-mind-control/

Cadwalladr, C. (2017, May 7). The great British Brexit robbery: how our democracy was hijacked. The Guardian. https://www.theguardian.com/technology/2017/may/07/the-great-british-brexit-robbery-hijacked-democracy

Contee, C., & Lemieux, R. (2020). Identity Crisis: The Blurred Lines for Consumers and Producers of Digital Content. In P. Loge (Ed.), Political Communication Ethics: Theory and Practice. Rowman & Littlefield.

Devenport, M. (2018, March 27). Aggregate IQ: DUP on whistleblower’s list. BBC. https://www.bbc.com/news/uk-northern-ireland-politics-43562388

“How Does Cambridge Analytica Flap Compare With Obama’s Campaign Tactics?” (2018, March 25). NPR. https://www.npr.org/2018/03/25/596805347/how-does-cambridge-analytica-flap-compare-with-obama-s-campaign-tactics

Shaw, M. (2018, August 30). Truly Project Hate: the third scandal of the official Vote Leave campaign headed by Boris Johnson. OpenDemocracy. https://www.opendemocracy.net/en/dark-money-investigations/truly-project-hate-third-scandal-of-official-vote-leave-campaign-headed-by-/

Tromble, R. (2021). Where Have All the Data Gone? A Critical Reflection on Academic Digital Research in the Post-API Age. Social Media + Society. https://doi.org/10.1177/2056305121988929

van Hooijdonk, R. (2018, August). What are psychographics? Understanding the ‘dark arts’ of marketing. https://www.richardvanhooijdonk.com/blog/en/what-are-psychographics-understanding-the-dark-arts-of-marketing/

Author:

Zoe Garbis

Project on Ethics In Political Communication

George Washington University

March 30, 2021

Images: Vote Leave / M. Shaw

This case study was produced by the Media Ethics Initiative and the Project on Ethics in Political Communication. This case can be used in unmodified PDF form for classroom or educational uses. For use in publications such as textbooks, readers, and other works, please contact the Center for Media Engagement.

Ethics Case Study © 2021 by Center for Media Engagement is licensed under CC BY-NC-SA 4.0