Creating digital discussion spaces that yield quality commentary has proven elusive for many newsrooms. Trolls, spam, incivility, misinformation – the list of problematic content appearing in news comment sections could go on. Politics, in particular, seems to bring out some of the worst in discussions.1

How could online political discussion be improved? It’s a question we asked in six different focus groups. The participants’ responses, discussed in a report released earlier this year, led us to create the experiment we describe in this report. Several focus group participants said that they wanted more facts and a better understanding of pro and con arguments about an issue before engaging in online political discussion.

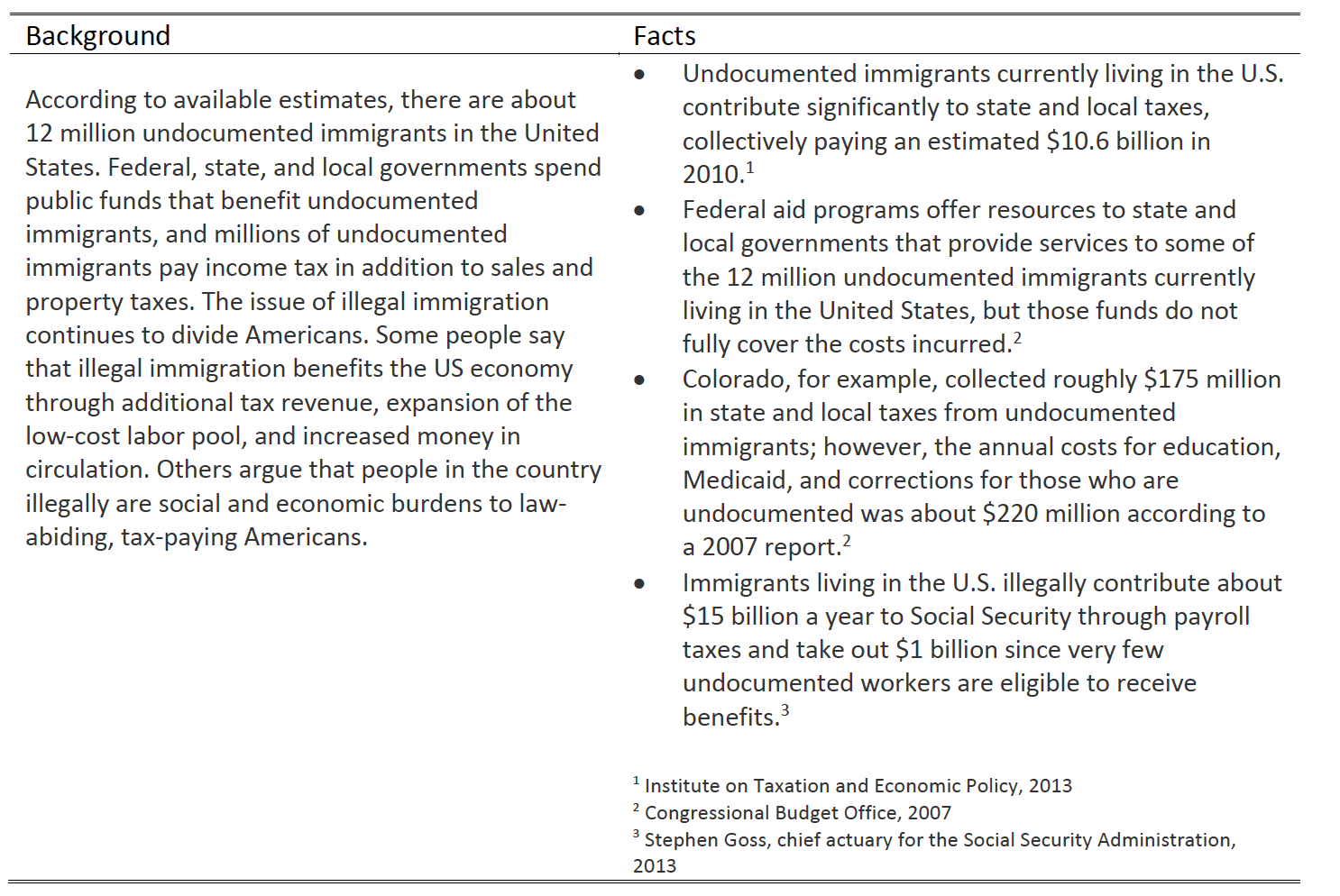

The experiment described below tested whether having (a) facts (verified information about an issue), (b) background information (a brief description of an issue and pro and con arguments), or (c) both affected people’s thoughts and behaviors. The issue chosen for this report is illegal immigration, a topic that is timely and for which factual information is available. We asked participants to look at an online discussion site we created for the purposes of this study and then to provide us with their reactions.

The Problem

Central to the health of democracy is people sharing their thoughts with one another about politics and public affairs.2 Engaging in political discussion helps people gain knowledge, allows them to teach or spread information to others, leads people to more considered opinions, and can be just as important for their understanding of the news as news exposure itself.3 Political discussion also informs people about ways to participate and influences their vote choices.4 Discussing politics and current affairs and the inherent disagreements that accompany this exchange are essential parts of American democracy.5

The Internet presents a unique opportunity for people to engage in political discourse. Online forums, blogs, news organizations’ comment sections, and social networking sites are just a few of the places where the American public can participate in discussions about public affairs. Research has found that online deliberation, like face‐to‐face communication, can increase knowledge about important issues, efficacy, and willingness to participate in politics.6 Yet much online conversation falls short of the deliberative ideals of civil, well‐reasoned conversations among respected discussants.

Of particular concern, the nature of online spaces and the discourse they afford open the door for incivility. Incivility is often perceived when people are impolite or behave rudely, but it can involve more than breaking the rules and practices of proper etiquette. Communication scholar Zizi Papacharissi argues that civility involves enriching democracy and that its opposite, incivility, occurs when people engage in “behaviors that threaten democracy, deny people their personal freedoms, and stereotype social groups.”7 In examining the civility of discussion threads in an online political news group, Papacharissi found that although mediated communication encouraged heated discussion, most messages were in fact civil. When incivility does take place, research indicates that it does not come from just a few trolls or flamers. Rather, incivility is distributed widely across commenters, and rates of incivility are higher in comments on stories that center on divisive topics and/or cite partisan sources.8 Identifying ways to improve the deliberative and civil nature of online spaces thus becomes a high priority.

Teams at the Center for Media Engagement and the National Institute for Civil Discourse conducted six focus groups across the country with the goal of a) better understanding how and why people currently use online spaces to access and interact with politics, news, and one another, and b) uncovering ideas for how to create more effective online spaces for political involvement and discussion. A total of 39 college-aged individuals from across the political spectrum with diverse interest in politics and political engagement participated in the focus groups. Among other things, participants shared that they: usually only talk about politics with friends and family, are reluctant to talk politics with people who disagree with them, view online spaces as somewhat treacherous for interacting politically, and use online platforms mostly to gather information when it comes to politics.

Participants voiced many reasons for bypassing opportunities to engage in online discussions with others, some of which included: lack of information, time, and/or interest; the time and effort required to work through large amounts of commentary, much of which is repetitive; a concern that online content tends to be biased and/or untrustworthy; and the absence of clear information or facts about the topic. Participants discussed the potential utility of clear and easily accessible information as a possible solution to some of these obstacles. Because multiple participants discussed the cumbersome task of sifting through articles and comment sections in order to distill and categorize the information and opinions, we conducted an experiment to investigate whether displaying bullet-point-style facts and/or background information would promote discussion engagement, civility, and consideration of opposing views.

Key Findings

The results, described in detail in this report, show the following:

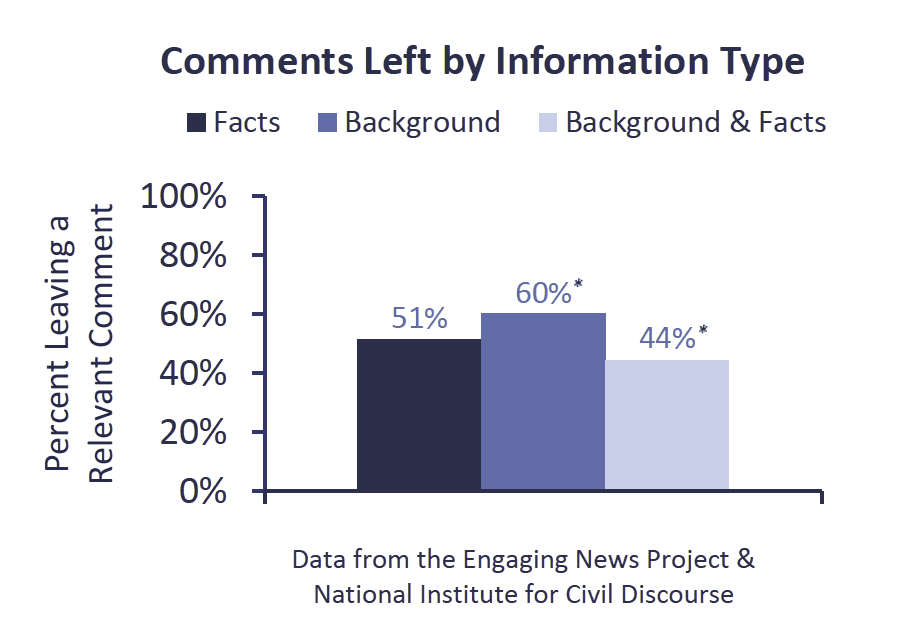

- 60% of study participants were willing to leave a comment when background information containing pro and con arguments was provided. By comparison, 51% did so when facts were included and 44% when both the background and factual information were presented.

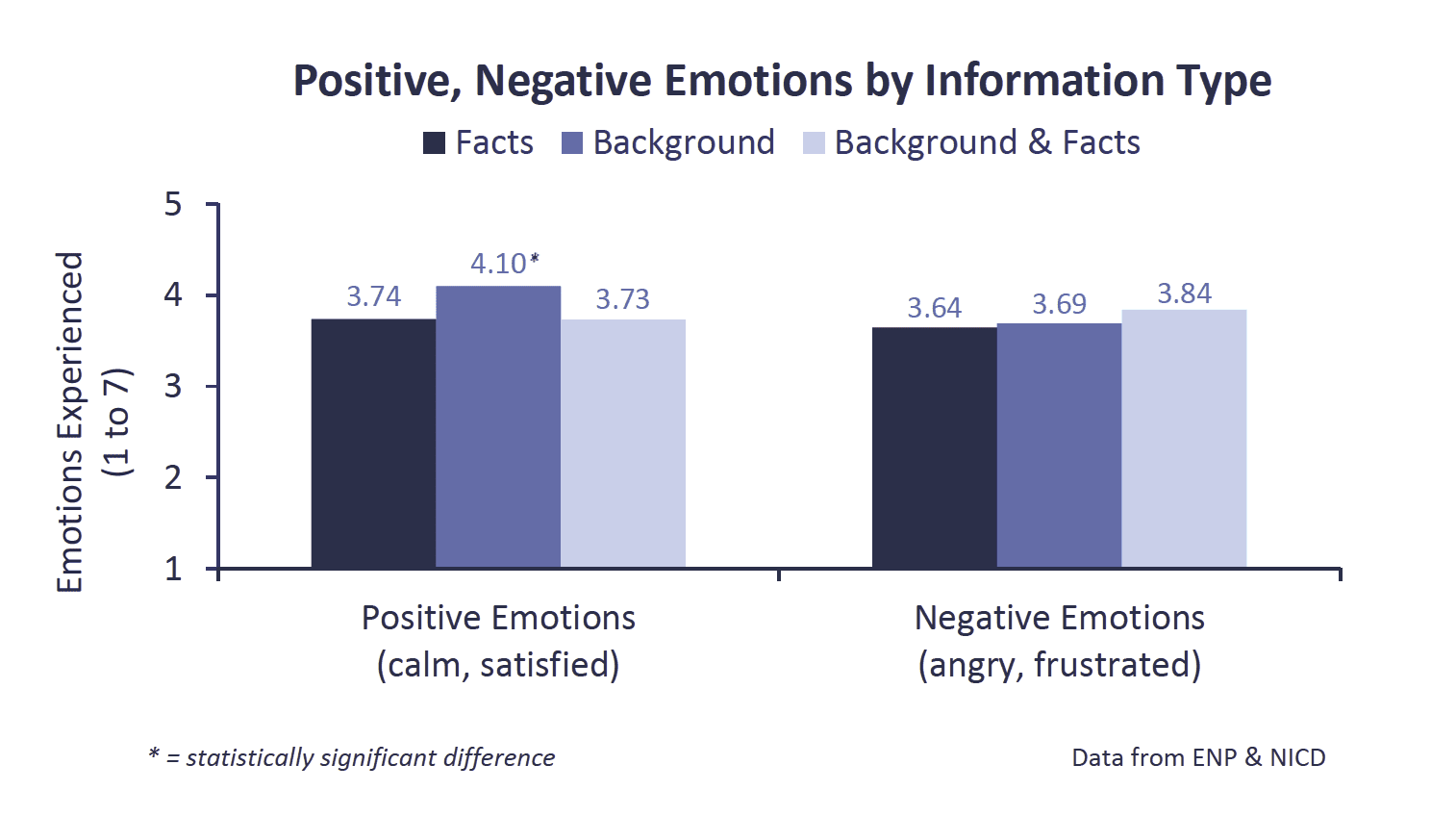

- Background information containing pro and con arguments made participants feel 7% calmer and more satisfied than factual information.

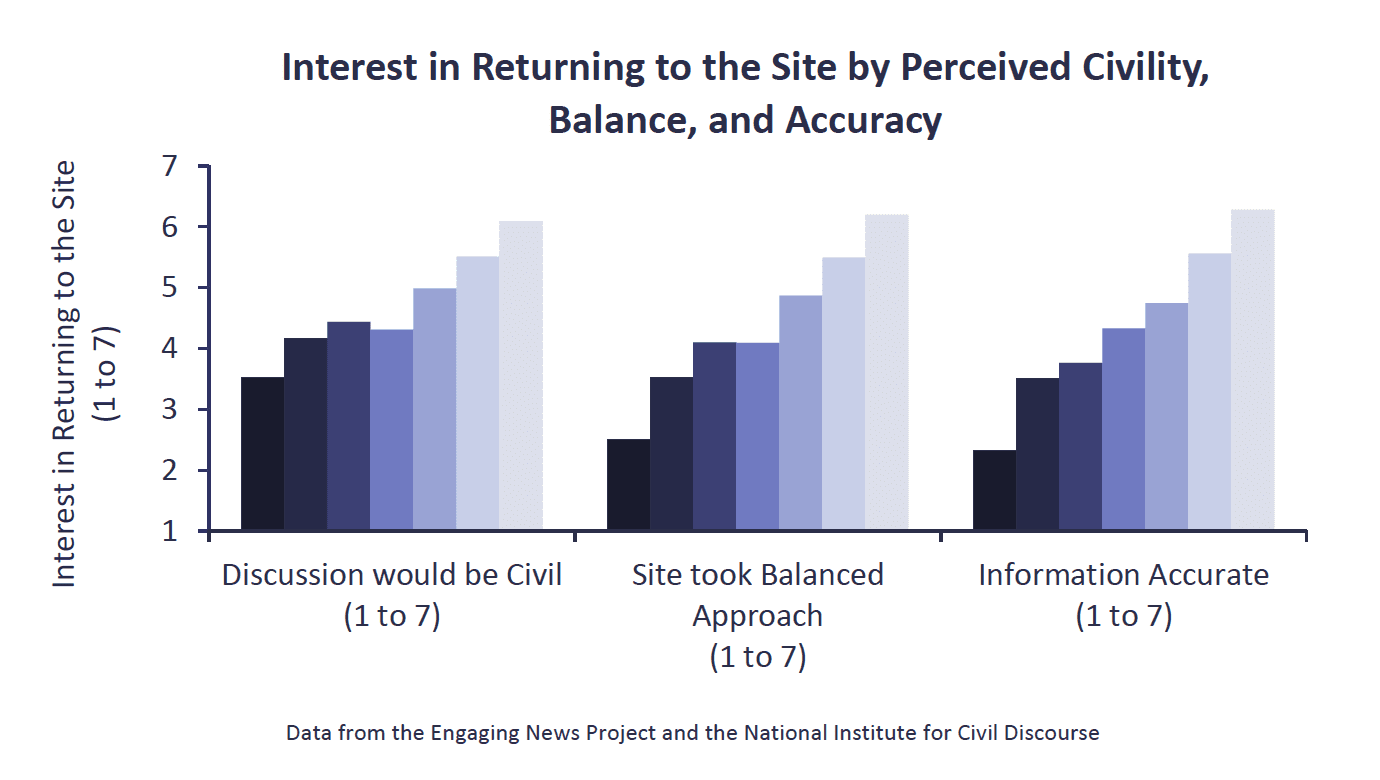

- When participants thought that discussion would be civil, they expressed more interest in returning to the site.

- When participants thought that the information was accurate and the site was balanced, they expressed more interest in returning to the site.

- Participants learned from both the factual and the background information provided.

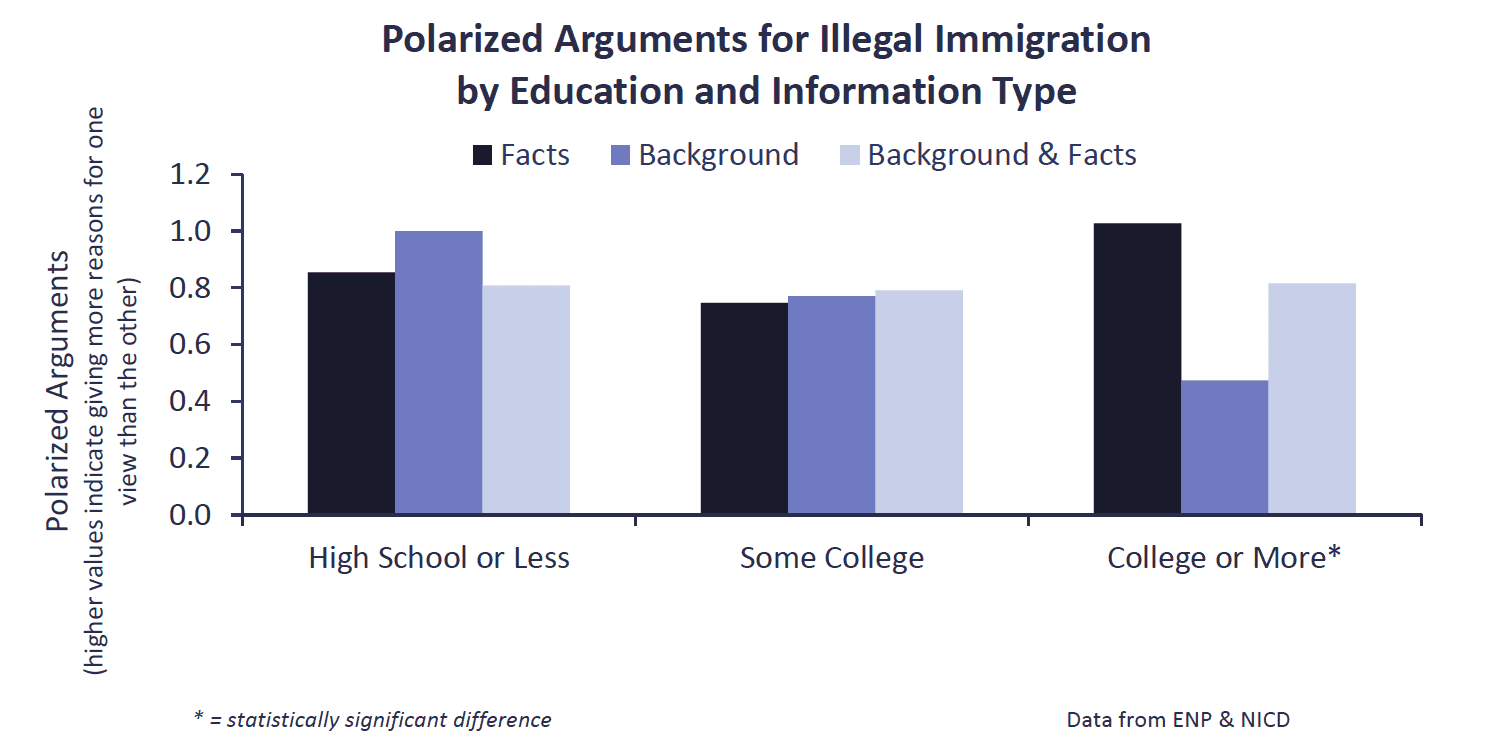

- Among participants with a college degree, background information yielded 18% less polarized arguments than the factual information. There were no differences among those with less education.

Implications for Newsrooms

The results provide some support for including background information containing pro and con arguments prior to online discussions. Study participants seeing the background information made more comments, felt calmer and more satisfied, learned about the number of immigrants living in the U.S. illegally, and, at least among college-educated respondents, offered less polarized arguments compared to either those seeing factual information or those seeing background and factual information together.

Study participants learned additional details from the factual information, but the factual information did not yield the same emotional or attitudinal outcomes. The results also show that perceptions of civility, accuracy, and balance are related to wanting to return to an online discussion forum. Just as organizations bemoan incivility in the comment section, these results show that audiences do, too.

These results provide a starting point for additional research. Given that we only looked at one issue, it isn’t possible to know whether the findings will hold for others. Further, we know about the number of comments, not about their substance. Our next step will be to examine the substance of the comments to understand whether they varied depending on the presence of factual or background information.

The Study

Background Information Yields More Comments

When background information preceded the comments, study participants were more willing to leave a comment compared to when the background information was accompanied by facts.9

Sixty percent of those seeing background information left a relevant comment, or a comment that referenced the information, compared to only 44 percent of those seeing both the background and factual information. Fifty-one percent of those seeing the facts by themselves left a comment, a rate that did not differ significantly from either of the other conditions.

Factual Information Made Participants Less Calm, Less Satisfied

Across the three information types, participants were most calm and satisfied when the background information appeared by itself.10 When a list of facts preceded the comment section, whether alone or in combination with the background information, respondents reported feeling less calm and less satisfied compared to those seeing only the background information.

In terms of negative emotions, participants rated their anger and frustration similarly regardless of the information that they saw.

Civility, Balance Linked to Interest in Returning to the Site

Participants were asked to rate how much they: would be interested in returning to the site, thought that the discussion would be civil, thought that the website took a balanced approach, and believed that the information provided was accurate.

We found significant relationships among these measures. The more respondents thought that the discussion would be civil, the more they wanted to return.11 Further, the more that respondents thought that the website took a balanced approach to the topic, or provided accurate information, the more they wanted to return.

Participants rated the sites similarly on their interest in returning, impressions of the civility of the discussion, beliefs that the site took a balanced approach, and belief that the information was accurate.12 It didn’t matter whether participants saw the site with background information, facts, or both.

Participants Learned From the Information Provided

Study participants completed a five-question quiz about the information provided. Participants did learn, but what they learned varied depending on the information that appeared prior to the comment section.

More participants viewing the background information – whether by itself or in combination with the factual information – correctly identified the estimated number of undocumented immigrants in the United States than those viewing only the factual information.13 Although the estimated number of illegal immigrants was mentioned in both the background and factual information, it was more prominently displayed in the background information.

Those viewing the factual information – whether by itself or in combination with the background information – correctly identified that undocumented workers pay billions of dollars in taxes and that undocumented workers received approximately $1 billion in Social Security benefits each year more frequently than those viewing only the background information. These results are in keeping with the information provided – only the factual information included these details.

There were no statistical differences in how likely participants were to answer the remaining two knowledge questions correctly depending on the information provided. Both of the questions (the amount that immigrants contributed to the U.S. in state and local taxes in 2010 and the gap between federal reimbursements and state costs for undocumented immigrants) were based on information contained within the factual information and not the background information.

Background Information Yields Lower Polarization Among Educated

We asked study participants about their immigration attitudes: whether they thought that illegal immigration helps or hurts the economy and whether immigrants strengthen or burden the country. Participants’ attitudes were similar, regardless of whether they saw the background information, the factual information, or a combination of the two.14

We also asked respondents to list up to three reasons that someone would support, and up to three reasons that someone would oppose, giving immigrants living in the U.S. illegally access to the same benefits and services available to U.S. citizens. We coded the responses to identify those that were sincere (a response with which someone holding that position would agree) and those that were insincere (a response that a person holding that opinion would find offensive). Neither the number of sincere reasons nor the number of insincere reasons varied depending on the information to which the participant was exposed.15

We next computed how many more sincere comments people made about one perspective compared to the other. For example, did a person list three sincere reasons in favor of one view on illegal immigration and only one reason in favor of the other perspective? If so, we gave them a 2 on our measure of polarization. The measure ranges from a maximum of 3, for a participant who listed three arguments for one view and no arguments for the other perspective, to a minimum of 0, where the participant would have listed an equal number of arguments for both sides.
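The arithmetic behind this measure can be illustrated with a short sketch. It is not the code used in the study. The function name and inputs are hypothetical, and it assumes the score is simply the absolute difference between the number of sincere reasons listed for each side, with each side capped at three reasons as described above.

```python
def polarization_score(sincere_support: int, sincere_oppose: int) -> int:
    """Return the gap between sincere reasons listed for each side.

    Hypothetical helper: assumes the measure is the absolute difference
    between the two counts, each of which can range from 0 to 3.
    """
    for count in (sincere_support, sincere_oppose):
        if not 0 <= count <= 3:
            raise ValueError("each side allows between 0 and 3 reasons")
    return abs(sincere_support - sincere_oppose)


# Three sincere reasons for one view and one for the other gives a score of 2.
print(polarization_score(3, 1))  # 2
# Three reasons for one view and none for the other is the maximum, 3.
print(polarization_score(3, 0))  # 3
# An equal number of reasons on both sides is the minimum, 0.
print(polarization_score(2, 2))  # 0
```

Because the score is an absolute difference, it records only how lopsided a participant's list of reasons is, not which side the participant favored.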

As shown in the chart below, participants with a college degree gave less polarized arguments about illegal immigration when viewing the background information than when viewing the factual information. There were no differences among those who had not obtained a college degree.16

A similar pattern appears when looking at insincere arguments. Again, those who had obtained a college degree gave fewer insincere comments when they saw the background information than when they saw the factual information.17

Methodology

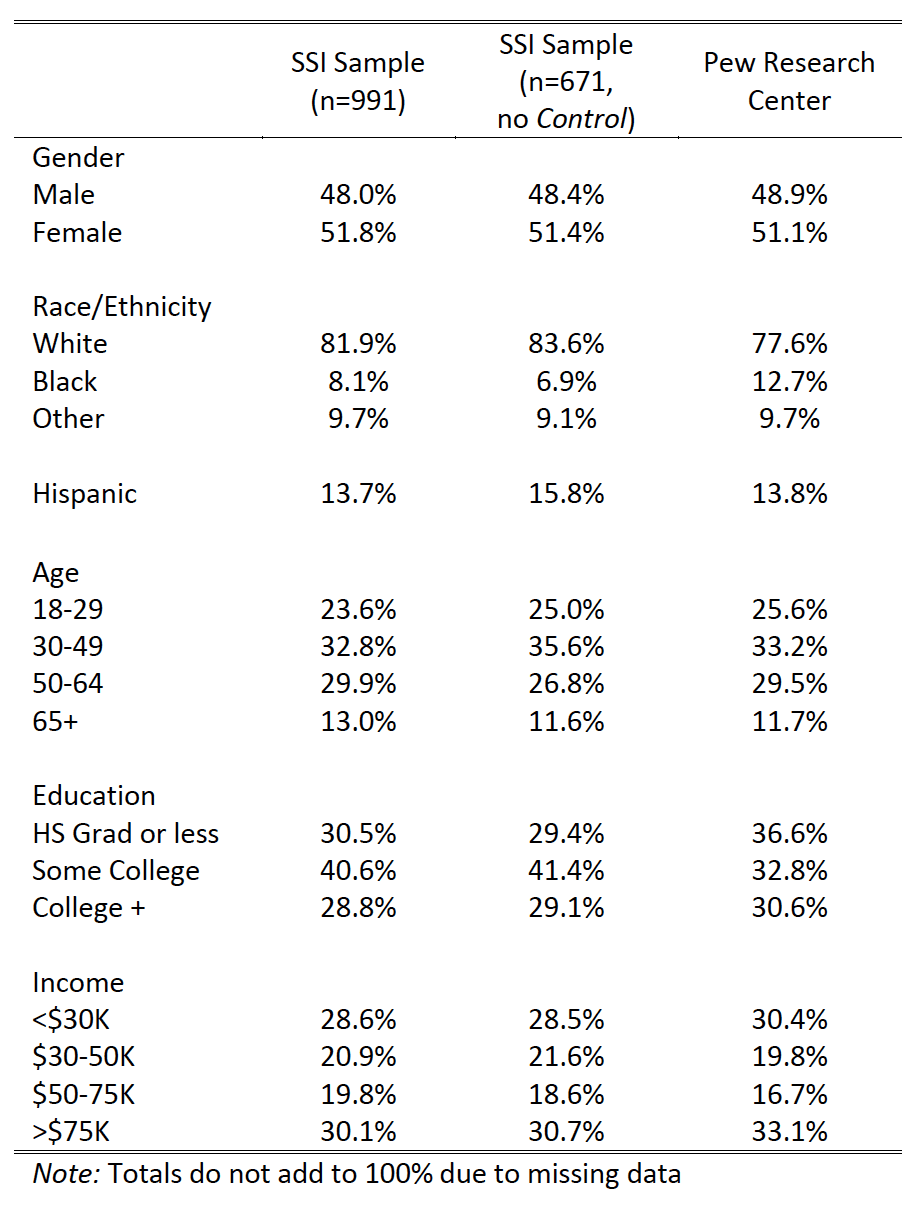

We conducted a between-subjects experiment to test the effect of the information preceding a comment section on commenting behavior, issue attitudes, emotions, and knowledge. Study participants were recruited via an online survey vendor, Survey Sampling International (SSI), which administered the experiment to a nationwide sample of 991 individuals in May of 2015.18

Study participants were randomly assigned to one of four conditions: (1) a Control condition, where they were not asked to look at a comment section and were not provided with any additional facts or background, (2) a Background condition, where study participants were provided with a paragraph describing the issue of illegal immigration and explicitly noting different views on the issue, (3) a Facts condition, where study participants were given facts about illegal immigration without any accompanying interpretation, and (4) a Background and Facts condition, where study participants were given both the background and factual information. Participants in conditions 2 through 4 were required to stay on the comment section page for 30 seconds before they were allowed to advance to the next page in the study.

Inspection of the data revealed that approximately 30 percent of respondents in the treatment conditions did not complete the study. The same was not true of respondents in the control condition, which resulted in different sample sizes per condition. A logistic regression including the measured demographic and political background characteristics revealed that Hispanic respondents, those who were older, and non-White respondents were more likely to be in the control group than they were in the treatment conditions (Nagelkerke R-square = .05, p < .05). As our checking indicated that the control group differed from the treatment groups, in this report, we present only differences among the three treatment conditions. As most of the questions motivating this research were related to differences among the conditions, this is only a modest limitation.

Participants in the treatment conditions were told “On the next page, we will ask you to look at a new online discussion forum. Please feel welcome to leave a comment. NOTE: The survey will allow you to move on to the next page after a reasonable amount of time has elapsed. Please take all the time you’d like to review the information.”

Although not randomly selected, the sample was chosen to match the demographic profile of Internet users according to the Pew Research Center. Participants had to be U.S. residents who were at least 18 years old. As shown in the chart, the sample roughly matched the Pew data, although respondents were less racially diverse and more educated than the Pew sample.19

SUGGESTED CITATION:

Peacock, Cynthia, Curry, Alex, Cardona, Arielle, Stroud, Natalie Jomini, Leavitt, Peter, and Goodrich, Raquel. (2015, June). Background Information & Facts. Center for Media Engagement. https://mediaengagement.org/research/background-facts-prior-to-comments/

- Coe, K., Kenski, K., & Rains, S. A. (2014). Online and uncivil? Patterns and determinants of incivility in newspaper website comments. Journal of Communication, 64(4), 658–679. doi:10.1111/jcom.12104 [↩]

- Barber, B. (1984). Strong democracy: Participatory politics for a new age. Berkeley & Los Angeles: University of California Press; Dewey, J. (1939/1988). Creative democracy: The task before us. In J. A. Boydston (Ed.) The later works of John Dewey (vol. 14, pp. 224–230). Carbondale: Southern Illinois University Press. [↩]

- Brosius, H. B., & Weimann, G. (1996). Who sets the agenda? Agenda-setting as a two-step flow. Communication Research, 23(5), 561-580. doi:10.1177/009365096023005002; Eveland, W. P., & Thomson, T. (2006). Is it talking, thinking, or both? A lagged dependent variable model of discussion effects on political knowledge. Journal of Communication, 56, 523-542. doi:10.1111/j.1460-2466.2006.00299.x; Katz, E., & Lazarsfeld, P. F. (1955). Personal Influence. New Brunswick: Transaction Publishers; Robinson, J., & Levy, M. (1986). The main source: Learning from television news. Beverly Hills: Sage; Wyatt, R. O., Katz, E., & Kim, J. (2000). Bridging the spheres: Political and personal conversation in public and private spaces. Journal of Communication, 50, 71-92. doi:10.1111/j.1460-2466.2000.tb02834.x [↩]

- Huckfeldt, R., & Sprague, J. (1995). Citizens, politics, and social communication: Information and influence in an election campaign. New York: Cambridge University Press; McLeod, J. M., Scheufele, D. A., & Moy, P. (1999). Community, communication, and participation: The role of mass media and interpersonal discussion in local political participation. Political Communication, 16, 315-336. doi:10.1080/105846099198659 [↩]

- Gutmann, A., & Thompson, D. (1996). Democracy and disagreement. Cambridge: Belknap Press. [↩]

- Min, S. J. (2007). Online vs. face‐to‐face deliberation: Effects on civic engagement. Journal of Computer‐Mediated Communication, 12(4), 1369-1387. doi:10.1111/j.1083-6101.2007.00377.x [↩]

- Papacharissi, Z. (2004). Democracy online: Civility, politeness, and the democratic potential of online political discussion groups. New Media & Society, 6(2), 267. doi:10.1177/1461444804041444 [↩]

- Konnikova, M. (Oct. 24, 2013). The psychology of online comments. The New Yorker; Coe, K., Kenski, K., & Rains, S. A. (2014). Online and uncivil? Patterns and determinants of incivility in newspaper website comments. Journal of Communication, 64(4), 658–679. doi:10.1111/jcom.12104 [↩]

- All comments left were reviewed to determine whether they were relevant comments or not. Irrelevant comments included statements like “No comment” or “Illegal immigration.” Two coders evaluated 20% of the data to ensure that the coding was reliable, which it was (Krippendorff’s α = 0.93). Of the 442 comments recorded, 79% were relevant. An ANOVA showed that there was a significant difference across conditions in terms of the number of relevant comments left (F(2,668) = 5.67, p < .01). This result replicates if we include all comments, regardless of whether or not we coded them as relevant (F(2,668) = 7.23, p < .01). Condition did not predict leaving irrelevant comments (F(2,668) = 0.25, p = .78). Inspection of the means using a Sidak correction shows that Background was significantly different from Background and Facts (p < .01) but not Facts (p = .18). Facts was not significantly different from Background and Facts (p = .39). [↩]

- An ANOVA showed that there was a significant difference across conditions in terms of the experience of positive emotions (F(2, 668) = 4.98, p < .01), but not in terms of the experience of negative emotions (F(2, 668) = 0.90, p = .41). Post-hoc comparisons using a Sidak adjustment for multiple comparisons revealed that the Background condition resulted in more positive emotions than either the Facts condition (p < .05) or the Background and Facts condition (p < .05). There was no difference in the experience of positive emotions between the Facts and the Background and Facts conditions. There was some indication that education moderated the results. When education was included as a continuous predictor, the relationship was significant (F(2, 664) = 3.26, p < .05). Including education as a trichotomized variable yielded a marginally significant result (F(4, 661) = 2.23, p = .06). Inspection of the results revealed that among those with a high school degree or less, the background information made them significantly calmer and more satisfied than the background information and facts. The provision of just the factual information yielded a mean rating of calm and satisfaction that was in between the other condition means and was not significantly different from either. For those with a college degree or more, the background information made them significantly calmer and more satisfied than the factual information. The provision of both types of information yielded a mean rating of calm and satisfaction that was in between the other condition means and was not significantly different from either. For those completing some college, the background information again yielded the highest rates of calm and satisfaction, but the differences were not significant. [↩]

- The correlation between interest in returning to the website in the future and thinking that the discussion would be civil was .42 (p < .01). The correlation between interest in returning to the website in the future and thinking that the website took a balanced approach to the topic was .55 (p < .01). The correlation between interest in returning to the website in the future and thinking that the website was accurate was .54 (p < .01). [↩]

- An ANOVA showed that there were no differences across conditions in participants’ interest in returning (F(2, 650) = 0.01, p = .99), impressions of the civility of the discussion (F(2, 650) = 1.33, p = .27), beliefs that the site took a balanced approach (F(2, 650) = 1.38, p = .25), and belief that the information was accurate (F(2, 650) = 0.53, p = .59). [↩]

- An ANOVA showed that there were significant differences across conditions in the percentage of participants who knew the number of undocumented immigrants (F(2, 595) = 7.21, p < .01), who knew that undocumented immigrants pay taxes (F(2, 595) = 15.51, p < .01), and who knew that undocumented immigrants take out less money than they put into Social Security (F(2, 595) = 12.51, p < .01). There were not, however, differences in knowing about money paid in state and local taxes (F(2, 595) = 2.69, p = .07) or in the costs incurred by states (F(2, 595) = 2.11, p = .12). Post-hoc tests using Sidak corrections for multiple comparisons confirm that more participants in the Background and Facts and Background conditions knew the number of undocumented immigrants compared to the Facts condition. Further, more participants in the Facts and Background and Facts conditions knew that undocumented immigrants pay taxes and take out less than they put into Social Security than participants in the Background condition. [↩]

- We asked four questions with Likert-style response options (1 to 7), using question wording similar to the Pew Research Center and the Public Religion Research Institute: (1) Immigrants today strengthen our country because of their hard work and talents; (2) Immigrants today are a burden on our country because they take our jobs, housing and health care; (3) Illegal immigrants mostly help the economy by providing low cost labor; and (4) Illegal immigrants mostly hurt the economy by driving down wages for many Americans. The responses were strongly related (Cronbach’s α = .81) and were averaged to form a single measure (Range = 1 to 7, higher values indicating greater support for immigrants, M = 3.74, SD = 1.46). There were no differences across the conditions (F(2, 667) = 0.97, p = .38). [↩]

- Two coders independently coded 20 percent of the cases and achieved strong reliability (Krippendorff’s α = .83). Experimental condition did not predict the number of sincere supportive or oppositional reasons given (F(2,668) = 1.34, p = .26; F(2,668) = 1.91, p = .15). Experimental condition also did not predict the number of insincere supportive or oppositional reasons given (F(2,668) = 0.32, p = .73; F(2,668) = 0.61, p = .54). We also evaluated whether there were interactions between the experimental condition and respondents’ political partisanship in predicting the number of supportive or oppositional reasons. The differences again were not significant. [↩]

- The interaction between education and condition was significant when predicting providing sincere polarized arguments using an ANOVA (F(4,661) = 3.03, p < .05). The interaction also was significant when predicting insincere polarized arguments using an ANOVA (F(4,661) = 3.00, p < .05). For both, post-hoc tests with a Sidak correction revealed that the significance stemmed from a difference between those with a college degree or more education seeing either the factual information by itself or the background information by itself (p < .05). [↩]

- The interaction between condition and education also was significant in predicting the total number of insincere arguments, regardless of whether they were left in support of or opposed to additional rights for immigrants living in the country illegally (F(4,661) = 2.51, p < .05). Inspection of the means suggests that those with a high school degree or less were more likely to list insincere arguments in the background condition (M = 0.87) than in the facts and background condition (M = 0.50), but the difference was only marginally significant (p = 0.09). Insincere arguments in the factual condition fell in the middle (M = 0.60). Given the marginally significant result and the exploratory analysis, further work needs to be done to replicate this finding. [↩]

- Responses were collected from 1153 respondents in total. We removed data from respondents who left junk data in the open-ended fields, e.g. a string of letters (7%), those who said that they were not able to see the website (5%), and those who gave the same response when asked about the comment section (straight-lining, 1%). We note, however, that when replicating the analysis including all respondents, results were unchanged. [↩]

- We examined whether any of the demographic attributes varied across conditions. Education was higher among the Facts group than the other two (F(2, 667) = 3.90, p < .05). We examined the results with education as a covariate and found no differences. There were some instances in which education moderated the results, as we discuss elsewhere in the report. [↩]