SUMMARY

Structured science engagement training is one of the main ways in which scientists and researchers build their confidence, skills, and knowledge for communicating and engaging with members of the public. Investment and capacity-building from UK funding bodies and universities over the past two decades have led to an expansion and normalization of science engagement training.

To help understand the impact and effectiveness of training in the UK, and what trainers and funders in the United States could learn from it, the Center for Media Engagement interviewed 24 science engagement trainers about their approaches, content, goals, trainees, evaluation, and best practices.

We found that trainers in the UK:

- Want to make a difference

- Are self-directed and don’t feel a sense of professional community

- Operate in an established, but sometimes dysfunctional, market

- Are reaching limited sections of the research community

- Choose outcomes that are measurable but possibly too “safe”

- Choose content that is largely transferrable and provides a baseline for future development

- Know what is popular, but know less about what works

- Incorporate practice into their training

- Had a challenging but unexpectedly busy year in 2020

- Want more structure and recognition, but not necessarily in the form of frameworks or accreditation

These findings are explored in detail in the body of the report. We identified three areas of development for science engagement training: capacity-building for long-term evaluation of training and for the continued development of researcher engagement skills; exploring a model to link the skills acquired in training to societal outcomes; and a much greater focus on equality, diversity, and inclusion (EDI) in who receives training, in training environments, and in the types of communication and engagement that are featured in training content.

INTRODUCTION

Science engagement training continues to expand across the UK and is now commonplace in universities, learned societies, sector networks, science centres, and festivals.

In recent years, leaders from across the scientific community have encouraged scientists to communicate and engage with the public about science. For many scientists, funders have made science engagement a condition of funding, or it has become an expectation of their institution. Multiple, sometimes conflicting, motivations appear to drive growth in science engagement, ranging from institutional factors such as funding or assessment to a sense of duty to society.1 2

Training is one way through which scientists can gain the mindsets and skills needed for effective science engagement. Training aims to improve the likelihood that scientists will go on to engage with the public; that their engagement efforts will be effective in bringing about societal benefits; and that, in time, scientists themselves will contribute to the body of knowledge underlying science engagement practice.

Science engagement training has become well-established in the UK since the turn of the century.3 It has been supported with centralized funding, capacity-building, and incentives for scientists to take part.4 There are several dedicated science communication qualifications (e.g., Masters, or Post-Graduate Diplomas), plus many opportunities for scientists to attend training provided by their organizations or by an external trainer. Recent research has looked at the uptake of training and public engagement activities,5 experimented with training delivery formats,6 7 and identified the key skills for science communication.8 Yet there has not been a comprehensive study of the UK science communication training landscape in the past decade.

By contrast, science engagement training in the US has expanded more recently. Supporting the growth of science engagement by creating better incentive structures, and embedding science communication training in research institutions, are both identified as priorities for the field.9 Our research team has published studies of the US science communication landscape,10 11 12 and we were interested in points of comparison between the two contexts.

Undertaken as part of a US-UK Civic Science Fellowship funded by the Rita Allen Foundation, and hosted by the US-UK Fulbright Commission and the Center for Media Engagement at The University of Texas at Austin, this interview study provides an opportunity to build relationships between US and UK science communication trainers, and to improve training practices internationally.

Definitions

- We describe all the people interviewed for this project as “trainers”. We sometimes refer to them collectively as the “training community” although we try not to generalize. We also try not to identify the specific institutional settings that the insights in this report refer to, since this could compromise the anonymity of trainers.

- We usually describe those who attend training as “people” or “participants”. Mostly they are scientists or researchers, but on occasion, other groups are involved (such as teachers or festival volunteers) that do not fall into those categories.

- We usually describe the people who act as intermediaries between the trainers and the participants as “clients” or “organizers”. Due to the nature of their roles, some interviewees would also fall into this category, but we always refer to them as trainers for the purposes of this report.

- Throughout the report, we refer to science engagement and the science engagement sector, which encompasses anyone working on having or facilitating conversations about science whether paid or voluntary.

- We use the abbreviation EDI to refer to equality, diversity, and inclusion, because this is the term most commonly used in UK contexts. It can be considered interchangeable with the US term DEI (diversity, equity, and inclusion).

KEY FINDINGS

In this section, we present short descriptions of the ten key findings from the research. Each of these is explored in more detail later in the report and supported by short excerpts from our interviews.

1. Trainers want to make a difference

They feel a duty to society and can clearly articulate the benefits they hope to achieve through training, but sometimes feel these don’t align with the motivations of clients and participants.

2. Trainers are self-directed

Most have non-linear career routes, have few relevant professional development opportunities, and often do training alongside other work. They report mixed levels of interaction and feelings of community.

3. Training has an established, but sometimes dysfunctional, market

Trainers are consistently able to get work, particularly from universities, but they often have to adapt to please or sometimes compete with their core clients.

4. Training is reaching limited sections of the research community

Participants are mostly self-selecting early career researchers, and trainers have few opportunities to influence who is participating.

5. Training outcomes are measurable but possibly too “safe”

Trainers are keen to incorporate the goals and motivations of participants, but this can result in outcomes that serve the interests of the research community rather than wider society.

6. Training content is largely transferrable and provides a baseline for future development

It typically gives a flavor or baseline level of knowledge and skills relating to science engagement, and introduces frameworks for applying them in practice.

7. We know what is popular, but we know less about what works

Evaluation typically captures and incorporates feedback about participant experience, but trainers lack the resources and capacity for monitoring longer-term impact.

8. Practice is part of training

Trainers place a strong emphasis on identifying practical opportunities for engagement, though post-training support is mixed. There are some embedded approaches where participants deliver activities alongside training.

9. 2020 was challenging but unexpectedly busy

Trainers were quick to adapt their activities and sustain their income during COVID-19 lockdowns, and are reflective about the societal shifts we are going through.

10. Trainers want more structure and recognition, but not necessarily in the form of frameworks or accreditation

They are naturally reflective and seek out best practices. Some felt a conversation about quality frameworks was ongoing and still unresolved, whereas others had given it little thought.

RECOMMENDATIONS

Reading through our key findings, we identified four major recommendations that could make training more strategic, impactful, inclusive, and connected. We found broad support from our study participants for each recommendation, a general desire for more coordinated approaches to science engagement training, and a bolder vision for what training can achieve.

All of these recommendations could be implemented quickly, and:

- Support different approaches, delivery methods, platforms, and motivations

- Allow trainers to retain full autonomy over their content and IP

- Are not dependent on major funding or policy changes

Build Capacity for Long-Term Evaluation of Training

Links to findings 5, 7, 10

Our results show that little effort is directed at assessing the effectiveness of training, and there is almost no work being done to build a picture of the long-term effects of training on participants’ behavior or of the degree to which they continue to develop science engagement skills through real-world practice. As the approaches and delivery methods of training appear to become more advanced, the capacity and funding to evaluate their effectiveness lag behind.

Although our study showed a mixed appetite for a shared evaluation framework, we believe there is a need for trainers, and organizers or clients, to identify common short-, medium-, and long-term goals.

Explore a Logic Model for Training

Links to findings 1, 3, 5, 6

Our research identifies an opportunity for trainers to test or to create a resource for participants to better plan towards long-term communication goals. At the moment, training often directs participants to the engagement opportunities that are available, and/or the outcomes that are most valued by clients. We suggest that an outcomes-led approach such as theory of change could be a useful starting point for linking societal goals for science engagement with outcomes, knowledge, and skills that can be supported by training.

Additionally, it could strengthen the case for training by helping:

- trainers to define and articulate the purpose of their work

- participants to plan their activities and development

- clients and organizers to broaden their approach to training

Prioritize Equality, Diversity, and Inclusion (EDI)

Links to findings 4, 6

Our research shows limited access to demographic data of training attendees and mixed confidence with EDI topics. It is unclear whether science engagement training spaces could be considered inclusive and welcoming environments to people of all backgrounds. Given the mixed experience and importance placed on EDI by participants in our study, there should be opportunities for peer learning on some basics of inclusive practice without compromising training content (discussed in finding 6). Furthermore, there are many tools, policies, and processes that trainers and clients could use to be more proactively inclusive.

In appendix 2 we have identified a list of actions trainers can take to be effective and visible allies and help ensure training is a safe space for people of all backgrounds.

Support Interaction between Trainers

Links to findings 2, 3, 9, 10

We found that trainers see a lot of value in networking and interaction, but this is currently happening informally. The US-based Science Communication Trainers Network was set up to address a collective desire for more interaction between trainers, and our research found broad support for a similar or parallel initiative in the UK.

Another simple step could be to create a centralized list of science engagement training providers so that opportunities for work can be distributed more fairly and are less reliant on personal connections.

DETAILED RESEARCH FINDINGS

Finding 1: Trainers Want to Make a Difference

We began by seeking to understand the outcomes that matter most to trainers, and what ultimately motivates them to deliver science engagement training. We found that trainers feel a strong duty to society and can clearly articulate the wider benefits they hope to achieve through training.

Multiple studies have shown the varied drivers of science engagement in the UK: there is no clear agenda, goal, or objective that is shared across people and organisations, and our study shows that this appears to carry through to the training community. Although motivations were mixed, trainers commonly identified with one of two long-term goals:

- To make people feel more informed and confident with science and to encourage them to use it to make better personal decisions

- To give people more voice in research and on the science-related decisions facing society

Trainers typically described these goals in terms of processes or skills that they would incorporate into their training, for example: “create dialogue”, “share ideas”, and “get involved in public discussions”.

Another motivation for trainers was financial; training is a consistent income generator with an established market. So, by definition, some of their goals and motivations will be linked with the goals and motivations of their core clients. Universities provide by far the largest market for science engagement training, and the growth in demand has been supported over the past twenty years by funding, successful case studies, frameworks, and assessment.

We found that trainers were very aware of the context in which they operate, and the funding that supports their work. The key policy changes and initiatives cited by trainers were:

- In 2002, Sir Gareth Roberts reported a mismatch between graduate and postgraduate skills and the skills required by employers.13 He recommended that all postgraduate students and early career researchers (ECRs) should have a clear development plan with a minimum training requirement. Universities received an extra £20 million from the government to support this, and many researchers received science communication training or related development activities as a result. More recently, a common model is the Centre for Doctoral Training (CDT) or Doctoral Training Partnership (DTP), in which all PhD students have designated training time and budgets.14 Both of these policy changes played a part in normalizing training, and in directing it towards early career researchers rather than those in senior positions.

- In 2008, six universities were awarded Beacons for Public Engagement grants to support and build capacity for public engagement over four years. Following on from the Beacons, eight public engagement Catalyst universities were awarded funding between 2012 and 2015 to take learning from the Beacons and ‘embed a culture of public engagement’. The National Coordinating Centre for Public Engagement was set up in 2007 to support those projects. All of the Beacons and Catalysts included staff training and development in public engagement with research, providing a set of case studies and norms for other universities to follow.15

- In 2014, the UK was the first country to include ‘impact of research on society’ in a university’s submission to the Research Excellence Framework (REF). All researchers are now expected to be able to communicate the impact of their work, defined as ‘an effect on, change or benefit to the economy, society, culture, public policy or services, health, the environment or quality of life, beyond academia.’ REF also includes an assessment of the research environment that universities provide.16 Between 2009 and 2020, Research Councils UK, the main government body that awards research funding, required grant applications to include a “Pathways to Impact” statement.17

“REF is something that is coming up more and more now as well. So, it’s a grading system of universities across the UK. And as people know that as a thing and people are driving towards the impact agenda has kind of taken over. So that’s a big part. Early days, communication, delivery of information through into engagement. And we’re currently in the era of impact demonstrable contributions.” (Interview 14)

We found that some trainers feel there is a tension between their motivations and the motivations of the people that organise and attend training. Even where trainers have autonomy over their content, they are often still held back by institutional processes and expectations that value institutional measures, such as funding and assessment, over societal change.

“Often, it’s less driven by social goals and more driven by what the funder says they want, but I suppose that’s just natural, that’s just how business and universities work. There’s a funder, they want something that has to be delivered, whereas, I suppose that’s where we have a slightly different approach. [Redacted] is a social enterprise and we have very clear social goals. We have to fund that because we’ve got to pay our staff, but the driver is trying to make change.”

We discuss the processes of trainer-university interaction more in finding 3. Throughout this study, we see examples of this tension coming into play. It is clear that a need to incorporate the motivations of universities is driving how training is organised, who receives it, what content it covers, how it is evaluated, and what outcomes it enables.

Comparison with the United States

Trainers in the United States describe their desire for training to result in broadly defined impacts on society, but lean towards “educating the public” as their ultimate goal.10

The infrastructure and funding around science communication training in the United States have expanded more recently. Frameworks such as NSF Broader Impacts are much earlier in their implementation.18 Creating better incentive structures and embedding science communication training in research institutions are identified as priorities for the field. Therefore, it appears that the US training landscape is less defined by policy changes and external drivers.

Finding 2: Trainers are Self-Directed

We wanted to understand the career routes of trainers, how they interact with one another, how they build skills, and to what extent they see themselves as a defined community within science engagement. We found that trainers did not take a linear route into the career, and often do training alongside other activities.

Most trainers are educated to postgraduate level and have a lot of professional experience, but fewer have had any formal training in how to train. Instead, they usually start training as a secondary activity alongside their other work, whether that is consultancy, event delivery, administrative, leadership, or research responsibilities. Therefore, access to professional development is mixed. For many, it’s an “apprenticeship model” where they learn by doing, pick up skills when they have the opportunity to do so, and work alongside others.

When asked to identify training that had been particularly helpful, many trainers mentioned that their biggest opportunities to improve and develop come from reflective practice, and from training in different contexts. Additionally, many more trainers referenced courses or experiences that happened early in their careers than development opportunities later in their careers. Trainers in universities tended to have many more opportunities for professional development, particularly when they have teaching responsibilities as part of their role.

Interaction between trainers tends to happen informally or through professional networks and conferences that are not related to training. Many trainers commented that although they know a lot of people who do training, they mostly talk to them about other things. There are also interactions when training alongside each other, or when someone’s role involves both delivering and organizing training. A smaller number do not feel connected to other trainers, and these tended to focus on media engagement, and often had a background in journalism rather than research or engagement. Very few trainers mention interaction with science engagement researchers, which is indicative of a research-practice divide.

“I think in the U.K., the vast majority of the people I know who do science engagement training, also do other things. There’s a lot of people who do four days of like public engagement work in the university, and one day they might be training, and there’s lots of performers who do training. Yeah. All sorts. So, I’d say like lots of the people that I used to book for [redacted] do training. So, I feel like I may not have met many of them because they’re trainers. But I feel like I probably know… If I had to hazard a guess I’d say like, I know maybe pushing half of the people who are regularly delivering training in the U.K.”

Many trainers suggested that a more formal network of science engagement trainers would be very valuable.

Comparison with the United States

Until recently, most trainers in the United States said they had infrequent interaction with other trainers, but they expressed a near-unanimous desire for more frequent and consistent opportunities to interact, with the aim of sharing evidence-based training and evaluation practices.10 11 12 The Science Communication Trainers Network19 was established in 2018 to provide regular structured opportunities to interact, professionalize the field, and broaden participation.

Finding 3: Training Has an Established, But Sometimes Dysfunctional, Market

We wanted to understand how science engagement trainers find work, how they interact with universities, how competitive the market is, and how secure they feel in their employment. We found that trainers are consistently able to get work, particularly from universities, but that they often have to adapt to please or sometimes compete with that market.

Most trainers are not organizing training themselves or offering training “direct to consumer”. Instead, they are booked by an intermediary representing a university, or less commonly, a company or membership body wanting to train their researchers, staff, or network. Trainers described these client relationships as forming organically and quickly becoming well-established. It is rare that trainers described having to regularly pitch for work; instead, it builds up through word-of-mouth or soft advertising online. The current economics of the training community relies on these established links, and this means that clients play a large part in organizing and setting success measures for training.

Generally, clients and organizers value the external perspectives that trainers offer. Even when there is capacity to train in-house, or a dedicated teaching faculty, clients often try to supplement their offer with trainers from outside their organizations. However, both trainers and people who book training would like the logistical side of the relationship to be more functional, with the payment process being a consistent source of tension. Additionally, some external trainers feel their expertise is being underused, or they are just brought in to “take peoples’ problems away”.

“It would give public engagement some clout somehow if it was taken more seriously by those groups. So that’s one thing. Then the second thing is that I’m hired in by public engagement professionals most of the time, and they do it on a needs-must basis. So, I don’t feel very connected to the academic structures per se. It’s always just on a case of need. So, is that good? I think I’ve worked with most people that have hired me before in some capacity, so they’re doing it because they trust me and they’re doing it because they don’t have capacity themselves. I’m not sure that indicates a good connection between the freelance and academic worlds. It’s just happenstance.”

Trainers acknowledge that there is competition for work, but are keen to state that this is rarely negative. There is a general awareness of who is doing what, and occasionally a tender or freelance opportunity will heighten the sense of competition. Trainers in all contexts are mindful not to create more feelings of competition, sometimes to the point of deliberately avoiding seeing any other training content. Most trainers feel like they have found their niche, and are happy for participants and clients to choose the training that will be most appropriate for them.

One area of concern for trainers is competition from in-house science engagement training run by people who have limited experience of the wider science engagement sector, such as an academic who has participated in communication activities. Their concerns are generally around whether that person would have the same breadth of knowledge and experience as a science engagement professional.

“Sometimes the training is passed to an academic at that university who is seen to have done this kind of activity. Which in some cases is fine. In some cases, they are fantastically experienced and great people to do a little bit more training. But in other cases, there are really better providers out there in terms of professional input on this. I think it’s very patchy. I think a lot of universities if you asked them would probably tick a box saying ‘yes we train in that area.’ But how you actually judge the quality of that training I think is a bit more difficult.”

There was also some support from both trainers and clients for a centralized list of training providers. The main benefit mentioned was being able to distribute work more fairly. Trainers often refer clients to people in their networks and recognize this may not be fair, and clients would like to be able to see the full scope of the training market.

Trainers also commented on the sometimes challenging working conditions in the field, particularly around insecure employment, requests to cover topics outside their usual remit, and inconsistent rates of pay which favor white, able-bodied men. This mirrors the wider economics of UK science engagement. Some expressed reservations about staying in training, or concern about what impact these conditions were having on the capacity to achieve the societal outcomes trainers say they want.

“And I think that’s a big difference between now and 30 years ago, which was that 30 years ago, if you found somebody who made a living in science communication, they were a freelancer. And now, so many people have these paid jobs, small, badly paid jobs but nevertheless, they’re employed. They’ve been corralled, I think, they’ve been domesticated. And we’ve become rather mild as a result, I think as a profession.”

Finally, there was a huge amount of concern about the future market for training, as it is widely anticipated that university budgets will be reduced as a result of the COVID-19 pandemic. We discuss the effect of COVID-19 on trainers more in finding 9.

Comparison with the United States

On the surface, the market in the United States looks similar to the UK, where training is usually funded by a host organization such as a university. Another common market is training delivered alongside large scientific conferences or meetings, which is typically paid for by the participants, although trainers sometimes receive funding that allows them to subsidise attendance. The people hosting the training have limited input, and content is designed based on trainer interest and experience rather than skills frameworks. Competition between trainers is rarely spoken about, even when prompted.

Finding 4: Training Is Reaching Limited Sections of the Research Community

We wanted to build a picture of who is receiving science engagement training, how they find out about it, and to what extent trainers are working to include people who have been historically marginalized in science or science engagement. We found that participants are mostly self-selecting early career researchers, and that trainers have few opportunities to influence who is participating.

Most trainers have limited access to information or data about the demographics of the people they train. When asked an open question about whether they notice any differences between attendees of training, they are much more likely to categorize by career stage than any protected characteristic.

Academic researchers make up the largest proportion of participants, and they usually have science backgrounds. Some trainers reported working with researchers from the humanities, arts, and social sciences. Other groups who receive science communication training include STEM businesses, technical staff, teachers, events professionals, and creative industry professionals.

Trainers identified PhD and early career researchers as the group most commonly receiving science engagement training. These participants are as likely to be self-selecting as to be mandated to attend by funders or institutions. Several trainers noted a lack of uptake or reluctance from more senior researchers, but many also offered solutions such as involving external voices in a senior role, or using small group or one-on-one coaching instead of workshop-style training.

When prompted to think about training attendance by groups that have been historically marginalized or underrepresented in science or science engagement, trainers gave varied responses. Many did not have access to the data, were keen not to speculate on protected characteristics, and/or had not noticed any trends. Where trainers did notice trends, they identified overrepresentation of women and people from the LGBT+ community compared with the research community. Some mentioned underrepresentation of disabled people and people from non-white ethnicities, and recognized this as an area where the sector needs to improve.

Very few trainers are actively targeting their training towards marginalized groups. This is mainly because participants are usually recruited by the intermediary who organizes training with little input from the trainer on how people are recruited and selected.

It should also be noted that science engagement can be an example of unrewarded additional labor taken on by minoritized groups in the research community. Some trainers reported times where they had been approached for support from people who feel under pressure to take on responsibility for science engagement in their research team. Similarly, where trainers have lived experiences of underrepresented characteristics, they do not necessarily consider themselves experts in equality, diversity, and inclusion (EDI) nor can they be assumed to be interested in training on EDI.

So, it is especially important that all trainers are effective and visible allies, and that training is a safe space for people of all backgrounds. This may mean targeting opportunities towards a broader array of identities so that people of all backgrounds feel more able to voice their experiences.

“I do remember the one I did for LGBTQ STEM Day. There were like different questions that came up and also I said, “Oh, by the way, there’s this LGBTQ science event.” Then people realizing, “Oh, so I can combine my identity with SciComm, if I want to.” … I’m trying to think in a mixed setting. Yeah, I think there’s more opportunity to talk about those things, whereas in a mixed setting, I don’t know if that’s really kind of come up as much, but it’s something where I feel as a trainer, from my personal experiences, I can talk about if that makes sense.”

There is a clear opportunity for trainers to share best practice on inclusivity – we discuss training content relating to EDI in finding 6. A few trainers mentioned how they have adapted their practice to be more inclusive, or where they wanted to take a much more active role in increasing opportunities for training. This included:

- Bypassing clients or intermediaries and offering training directly to researchers that identify with a minoritized group, sometimes as part of initiatives like LGBTQ STEM Day

- Running a pilot programme which offers long-term media training to female scientists

- Asking researchers for demographic information at the point of sign-up to ensure all characteristics are represented when space in training is limited

- Choosing a female participant to contribute first in discussions

- Delivering content in a format that suits neurodivergent people

- Delivering content in a format that suits people who do not have English as a first language

- Offering closed captioning

- Signposting resources and opportunities that do not rely on extensive previous experience, require volunteering, or assume cultural capital

Many trainers said that the move to remote delivery caused by the COVID-19 pandemic has been a driver for reflecting on how training can be more inclusive. We discuss more about the impacts of COVID-19 on training practice in finding 9.

Comparison with the United States

It is a very similar story in the United States, where people attending training are mostly self-selecting early career scientists, with little cultural or ethnic diversity. Some excellent examples of inclusive training practice include Reclaiming STEM20, which centres training specifically for marginalized scientists, or the IMPACTS programme at the North Carolina Science Festival which prioritizes minoritized scientists.21 The Science Communication Trainers Network has identified broadening participation as a priority area19 and the recently established Inclusive Science Communication Symposium 22 has expanded rapidly.

Finding 5: Training Outcomes Are Measurable, But Possibly Too “Safe”

We wanted to know what trainers considered to be the outcomes of training, to what extent they incorporated people’s goals and motivations, and what key differences there were between people who had and hadn’t received training. We found that trainers are keen to incorporate the goals and motivations of participants, but that this can result in outcomes that serve the interests of the research community rather than wider society.

In most cases there was a disconnect between the societal outcomes that drive trainers to do their job and the outcomes they expect to see after a training session. This is to be expected, and there are two main reasons. First, it is unrealistic to expect that a single training session would equip people with everything they need for wider societal change; and second, outcomes from training need to be measurable and immediately reportable to the client.

Four things were commonly mentioned by trainers as achievable and measurable outcomes:

- A broader view of what counts as science engagement

- More awareness and sensitivity towards different audiences for science engagement

- Increased confidence with science engagement

- Intention to put the training into practice

Many trainers also mentioned that the mixed starting points of the people they train mean it’s hard to set outcomes, especially for those with a natural ability or some existing skills and experience.

There are mixed approaches to incorporating trainee goals and motivations into the content and approach. Pre-surveys are common and most trainers see them as a good way to help frame the training. Specific motivations that trainers said were common among participants included tackling misinformation, supporting young people to take up science careers, and raising the profile of their work among media or policy audiences. Some trainers reported that they begin their session by asking people to reflect on what motivates them, and how different engagement methods and audiences could fit in with those motivations.

“They ask me a lot about practical stuff, if they have an idea, and then I always bring them back to our logic model, again, trying to understand what’s the audience, how are we going to involve our public?”

There were other examples of this more strategic approach, where trainers run activities designed to help people clearly articulate why they are doing science engagement, link their motivations to societal outcomes, or transfer processes for engagement to different contexts.

But very often it’s the client that sets the objectives for the training on behalf of the participants, so training is geared towards the goals and outcomes that matter to them. In many cases, this means that the outcomes of training implicitly support the drivers and motivations of universities that are discussed in finding 1. Often these outcomes include preparing people to deliver activities that contribute to university publicity, improving research impact statements, or providing skills defined in researcher development frameworks.

“I tend not to ask that of the people that I’m actually training so normally that conversation does happen, but it takes place with the people that are booking me. And so, either the Engagement Manager or the Research Development Manager will speak to me and we will run through what they hope to achieve from the session…if you did want the motivations of why people might come to my training, I think one thing that’s changed is, REF and the impact agenda. I think that’s driven a lot of training.”

Therefore, trainers often frame their training around individual benefit such as skills (e.g., inclusion, evaluation) or opportunities to try delivery methods (e.g., media, events). Some trainers will frame their session around a sense of public duty or morality to reinforce outcomes-based arguments for science engagement, but it is unusual for trainers to be openly trying to achieve a defined societal change, preferring to remain neutral over the ultimate aims of science engagement.

Comparison with the United States

In the United States, training outcomes and goals are much more influenced by the participants, in a “choose your own adventure” approach to training. However, the assumption that people will successfully identify their own goals has led to a situation similar to that in the UK, where technical skills such as clear writing and presentation are emphasized over strategies for change. Trainers in the United States also identify feeling more confident as a common outcome of training.

Finding 6: Training Content Is Largely Transferrable and Provides a Baseline for Future Development

We wanted to find out what content was included in science engagement training, what skills were considered important, and how key concepts were introduced. We found that training content typically gives a flavor or baseline level of knowledge and skills relating to science engagement, and introduces frameworks for applying them in practice.

There was a lot of consistency in the content of training. Some trainers organize their content into modules which can be combined and delivered according to demand. In these cases, trainers notice they tend to have higher uptake for very practical, skills-based modules as compared to conceptual modules.

Most people include an overview of science communication and public engagement in their training, either as a “history of the field” or by introducing a few key concepts such as the difference between communication and engagement. This is where they tend to cover science communication theories, case studies, organizations, and key research. Most of this content is not up-to-date with the science communication research literature, and is mostly used for the purposes of background and broad introduction. It is typically included to give credibility to the approach the trainer is taking and show that science engagement is well-established and has a grounding in evidence.

Similarly, almost all training includes content on understanding and working with different public audiences, and linking audiences to the purpose of engagement. In most cases, the content isn’t linked to a single defined audience, but is designed to help people reflect on the potential audiences for science engagement. Where the audience has been pre-defined (e.g., media, festival attendees, or community groups), the training approach is less reflective, and usually presented as “what works”. A few trainers mentioned bringing audience voices into their training, either as co-delivery partners or as part of a follow-on activity.

Most training introduces project management strategies or frameworks for the participants to use. The formats vary depending on whether the training focuses on a particular skill, activity, or delivery method, but are broadly designed to prepare the participants for putting the training into practice. Examples included:

- News or press release writing structure

- Broadcast interview techniques

- Public speaking

- Project management, fundraising, marketing and budgets

- Stakeholder or audience mapping

- Using social media

Another common area of content is evaluating science engagement activities, though the approach to this varies between trainers. As with the frameworks, this changes depending on whether the training is focused on a particular skill, activity, or delivery method. Content on evaluation is often influenced by the REF requirement to demonstrate measurable impact from research. Trainers usually introduce common quantitative and qualitative evaluation techniques and give examples of how they can be applied in science engagement settings.

Alongside knowledge and skills-based content, many trainers aim to introduce mindsets, reflection, and self-evaluation as a part of their training. This comes from an acknowledgment that once the session is finished, they will have limited contact with the participants, but still want to enable them to develop their engagement practice. Similarly, some trainers also include ways to find contacts, partnerships, and networks so that participants are better supported to sustain science engagement. A few others include time-management and how to fit in science engagement alongside other responsibilities; methods for self-advocacy; and how to show leadership, influence, and drive culture change.

A few trainers include content about equality, diversity, and inclusion (EDI) topics, but this is not mainstream, and is typically framed around “reaching underserved audiences”, or references to unconscious bias, avoiding stereotypes, and running accessible events. The trainers who explicitly cover EDI usually embed inclusion throughout their content, and within any frameworks they provide (for example, equitable partnerships), rather than as a standalone session. Considering that many science engagement organizations are incorporating EDI concepts into their work, we would have expected it to feature more in training content. It could be that clients are not expecting or requiring science engagement training to cover EDI, or that people are receiving EDI training separately from other providers.

Overall, training content is designed to achieve the outcomes listed in finding 5, to build awareness and confidence, and to prepare people to do science engagement. Trainers follow a fairly standard curriculum, but place their emphasis in different places, and differ in the approaches, frameworks, tools, and activities.

Comparison with the United States

Trainers in the United States tend to be more oriented towards designing and delivering information to the public. They place most emphasis on using storytelling and narrative structures to make work engaging, listening to audiences, and crafting effective messages. Similarly to those in the UK, United States trainers include content to help people feel more comfortable with their decision to do science engagement and to challenge institutional cultures where it is not valued. United States trainers also tend to make their content transferrable across platforms and delivery methods.

Finding 7: We Know What Is Popular, but We Know Less about What Works

We wanted to understand how science engagement training had changed over time, and how training is evaluated. We found that evaluation typically captures and incorporates feedback about participant experience, but that monitoring longer-term impact can be challenging.

Most trainers could identify ways their training had changed over time, and these changes were usually motivated by trends in science engagement, feedback from clients, or feedback from participants. In terms of content, some spoke about the increased need to respond to technological changes, particularly social media; some spoke about putting more emphasis on evaluation training; others just said the quantity of content to include had increased significantly. Another commonly cited change was trainers changing their delivery methods, becoming more aware of their Unique Selling Proposition (USP), and becoming more confident. Some talked about their training becoming more bespoke while others have developed a “menu” of training to offer to potential clients. Finally, trainers noticed that participant groups used to have more of a mix of career stages and backgrounds.

There are mixed approaches to evaluation. Some interviewees said it should be “proportionate” to the time, cost, and complexity of the training. Pre- and post-training surveys tend to be light-touch, usually just asking for one to three ways in which the training has changed knowledge, confidence, or intention to act. By contrast, training given as part of an MSc or professional qualification is heavily evaluated with the input of external evaluators.

“We’re constantly trying to figure out different ways of evaluating. Ideally, we would love to bring in an evaluator, a professional evaluator to look at our evaluation forms and say, “Is this working and everything?” But again, like I said, we’re a charity which completely depends on resources. For now, it’s working quite well, we do see a change in attitudes, but the other hard thing is to make sure that people actually do the evaluation. Because you can’t control who’s going to leave midway through training for example, especially free training, people take that for granted a little bit, but we don’t want to add additional barriers to people accessing training.”

When trainers are external to the participants’ organization, there is a general view that the client should do most of the evaluating. Trainers prefer to use a combination of observation, client feedback, and more self-reflective evaluation rather than spend time and resources collecting data.

“Very often universities, especially if they are just bringing us in, they would have their own in-house, what they need to demonstrate to prove value. Depending on our relationship, we generally try and incorporate that or try and add value to that, whether that’s incorporating it using interactive approaches within the training, so it’s not just a form at the very end.”

Since almost all trainers think that a single training session is just a starting point, and that science engagement skills are something you build up by practice, most advocate longer-term evaluation. There are a few trainers trying to implement this. Trainers based in universities or membership organizations have begun linking who attends training with uptake of science engagement opportunities to create a “user journey” or set of case studies. Trainers who have asked participants to complete forms or attend focus groups after training have often struggled with low uptake and instead use interviews or try to build informal networks between participants.

Long-term feedback is especially difficult for external trainers to obtain unless the training involves multiple sessions (e.g., supporting a PhD program). One trainer offers to work with the client on longer-term evaluation as standard practice. Some mentioned they receive unsolicited feedback or updates from attendees months or years later. However, the move to remote training has meant there is more potential for individual follow-up with participants to give feedback on ideas they have had post-training.

As with the content discussed in finding 6, training is evaluated to measure the outcomes identified in finding 5 rather than as an indicator towards wider societal change. This fits in with the idea that science engagement training is currently used to provide a “baseline” which participants then build upon with practice and further skills development.

Comparison with the United States

Trainers in the United States are taking a similar approach to evaluation, usually including a simple satisfaction form which sometimes includes questions to measure participants’ change in confidence or knowledge. They also collect anecdotal feedback from participants and clients. Some trainers express a lack of confidence in their approach to evaluation and a desire to work more closely with evaluation professionals. Another barrier is that funding for training is insufficient to cover evaluation costs, so they struggle to make the case for extra resources when training is already expensive and time-consuming to facilitate.

Finding 8: Practice Is Part of Training

We wanted to know to what extent training prepares people to practice science engagement, and what opportunities they are directed to. We found a strong emphasis on identifying practical opportunities for engagement, and some embedded approaches, though generally, post-training support is mixed.

Almost all training includes opportunities to try out engagement skills and receive feedback from the trainer or other participants. This is seen as a good way of building confidence and intention to act. Similarly, trainers provide resources (some common examples of which are described in finding 6) designed to help participants put the training to use independently.

Almost all trainers encourage participants to put their training into practice. Many trainers have a conversation with the client prior to training and suggest they identify some upcoming opportunities. Sometimes this works the other way around, when the trainer is brought in to prepare scientists for a particular activity. Trainers find that having a pre-defined activity is motivating for the participants and helps them to focus their session. However, some external trainers noted that this would be more effective if they had further opportunities to feed back to participants after the delivery activity.

“I think for most training, it’s probably not successful unless there’s a specific event that is around. So, we almost always try and encourage people if they’re bringing us in to try and identify an event […]. That the trainees can actually do something at because if they don’t have that focus, that generally people won’t, most people will have the training and go away and forget it until they’re then asked to do something in a year’s time or something. But then if we’re not involved in that event and observing and feeding back, I think people don’t get useful feedback on what they’ve done because perhaps it’s nobody’s job to do that […] So, I think missing that circle of learning is something that’s often not provided in-house, or obviously if it is, the person observing might not be expert in that area, so they can only observe it in their own experience.”

In a few cases, trainers do project-based training where they work with the participants over a longer period to deliver an activity and evaluate its success, such as developing science festival activities or community engagement projects.

“We did a two-day course […] And then in the evening they did an event. So, we linked up with the Festival and then we’d all trot down to the pub and then do that kind of speed dating type format where we have members of the public and groups and then the researchers all cycling around. And then the next morning, we’d all reconvene and we’d look at the evaluation from the designs, we’d look at the results from it, which we carefully analyzed. And then they would see, “Oh, I wish I’d asked that. I wish I’d done that.” And it was so amazing and it really made evaluation, which can be quite a dry subject, to a lot of people come alive because they would really understand, “Oh, I wish I’d asked that at the start.” […]. So that was a really interesting course, but it was, again, the core of it was the doing of something and then the training wrapped around it.”

If no activities have been pre-defined, trainers usually include their own suggestions of upcoming opportunities to put the training into practice. External trainers do not expect to have ongoing contact with the participants, so they often provide a progression of activities for participants to consider. This reflects that as people gain experience of science engagement, they often move from delivering engagement activities to planning, supporting, or influencing engagement. It is clear that trainers orient training towards the practical opportunities that are widely available to participants.

Some trainers mentioned the importance of ongoing support from the participants’ institution (e.g., press offices, engagement managers). But they have also noticed that the seniority, roles, and responsibilities of people who could offer in-house support can be very inconsistent, particularly in universities. In some cases, this means trainers are unable or reluctant to recommend working with institutional public engagement teams, especially if they feel they will offer conflicting advice or will only offer a narrow set of opportunities for engagement.

“I also recently ended up having a chat off the back of like some training with a researcher who has chronic health issues, and they absolutely love SciComm, and love outreach, particularly. But the message they’ve had from the university is very much the only way to do this is to stand on a stand all day. And I’m just like, “No.” And it turned out, for example, they’re interested in podcasting. I was like, “Wow, that’s great. That is something you can literally do in like short bursts of time when you have energy.””

Comparison with the United States

Science engagement training in the United States is similarly practical, although it is much less common for there to be pre-defined or pre-identified opportunities to put the training into practice (i.e., the training offers a “rehearsal”, but there isn’t a guarantee of a “performance”). However, trainers are making efforts to keep track of their alumni through means such as mailing lists for sending extra resources, assigned “buddies” as part of the training, or access to a set amount of post-training technical assistance hours.

Finding 9: 2020 Was Unexpectedly Busy

We wanted to understand how trainers had been affected by the unsettling events of 2020, including the COVID-19 pandemic and the murder of George Floyd and the resulting prominence of the Black Lives Matter movement. We found that trainers were quick to adapt their activities and sustain their income, and are reflective about the societal shifts we are going through.

Despite many other science engagement activities being postponed or cancelled, and initial feelings of shock and uncertainty, most trainers found their volume of work increased in 2020. Some described training as a “lifeline”, particularly during national lockdowns, and said that it made up for lost income from other work. The increased demand for training was mostly due to researchers being away from laboratories or fieldwork, and having more time to take part in training.

For some, this increased workload brought challenges with balancing other commitments or maintaining physical and mental health. But for many, delivering training was the most fulfilling and successful part of 2020. Almost all training was delivered online, and most trainers were able to adapt the timing, content, and approach to suit remote working. Some trainers already had some experience in online events, but others found they quickly had to upskill and that adapting their content took a huge amount of time and effort.

Some ways in which training changed included:

- Asynchronous delivery

- Fewer time constraints

- Opportunities to practice skills in a familiar home environment

- Co-delivery of training

- Offering training direct-to-consumer rather than via an institution

- Collaborative tools like Padlet or Miro

Many trainers find online training enjoyable and fulfilling. They mentioned benefits such as flexibility and inclusivity as compared to face-to-face training, and, once they were familiar with the platforms, the opportunity to innovate and offer different experiences to participants. The most common negative aspect was that trainers find it much harder to build community among participants. Some trainers suggested it would be useful to share best practices for online training, for example what group sizes, attention spans, and interactivity others are working towards.

A few trainers mentioned the murder of George Floyd and the resulting prominence of the Black Lives Matter movement as having an impact on their work, in some cases inspiring them to incorporate anti-racism into the content they were delivering.

Another theme was using the lockdown period to rethink certain activities and plan for the future. In a few cases, trainers stepped back completely and concentrated on creating new strategies, or supporting colleagues on what they saw as higher priority work.

“And then there was just a small core team that continued to work on through, but it actually turned out in a way to be really quite beneficial for us because we gave everybody a chance to kind of stop and look at what are we doing and how are, and I think for a long time, we were just trying to do absolutely everything. We were trying to cover every single theme in science and for no apparent reason, we would do a show on forever kind of thing. And so, it’s given us a chance to sort of hone in and look at right, what are we here for? And start to define our work a little bit more.” (Interview 21)

Trainers also spoke about the difficulty of balancing work with personal lives, feeling isolated or exhausted, being on furlough for long periods, and coping with constant uncertainty. This has taken a toll on well-being. Trainers have generally felt supported by their peers and professional community, and consider themselves more fortunate than many others in science engagement and wider UK society.

“What a question, I’ve had a horrible year. Honestly, much of it has been good. I’ve been really proud of some of the work I’ve done. I’ve met some wonderful people in different ways… There’s all sorts of things that have gone really well. But I have found this year really hard and I find the dichotomy quite difficult to get my head around sometimes because I love online training it turns out. I’ve delivered more training this year than I have done for many years. And that’s been a fantastic part of what I’ve done. At the same time, I find it difficult to get up in the mornings and I’m finding just concentrating on my tasks quite hard. So, I don’t always feel like being a presenter at the moment. It takes extra emotional effort to be that person leading a session. And I’m much more tired afterwards.” (Interview 4)

Despite a better-than-expected 2020, many trainers expressed concern that opportunities will decrease in the coming months as universities reduce their spending internally and externally, and as redundancies in science communication organizations lead to an increase in freelancers looking for work.

Comparison with the United States

Our interviews with North American trainers were conducted in 2017, so there is no direct point of comparison for this finding.

Finding 10: Trainers Want More Structure and Recognition, But Not Necessarily In the Form of Frameworks or Accreditation

We wanted to understand whether trainers saw benefits in collating best practices or creating a quality framework for science engagement training. We found that trainers are naturally reflective and seek out best practice. Some felt the conversation about frameworks had happened many times and was still unresolved, whereas others had given it little thought.

Most trainers are naturally reflective, enjoy thinking about their own practice, and actively build it into their ways of working. Therefore, many are interested in conversations around quality, best practices, charters, or frameworks. But opinions on whether the training community should have a centralized quality mark, charter, or set of standards are mixed.

Benefits included working within some agreed structures and definitions, a shared understanding of terms, an ability to procure and obtain work more equitably rather than relying on personal contacts, and having some resources to guide their practice. It was felt that some existing frameworks and definitions (such as those from NCCPE) were fit for introductory training sessions, but were not transferrable across all contexts. At the same time, trainers felt it would be pointless for them all to be developing frameworks individually, and that a lot of time could be wasted debating what to call things.

However, there was little support for formal accreditation that duplicates the way MSc or other academic courses already do quality control, that standardizes content, or that creates extra barriers for people who organize and deliver training.

“It’s the same way that we should be developing our public engagement activities in response to the audiences that we want to deliver to. I consider the training development to be the exact same scenario. What is it people actually want? So, I think if we start going through that accreditation process, there’ll be some aspects that are positive. The quality would hopefully improve. I think we would lose the ability to be quite responsive to what people require and yeah, I would ask then who gets to judge whether my course is any better or worse than somebody else’s course based on what people are asking me to deliver. So, I don’t know. I can see the benefits of it, but I can also see that it would possibly hamstring people, and so there being a few accredited providers that then everybody has to use.”

Many trainers also felt the most effective aspects of their training are when they give bespoke and individual feedback, which is not something that could fit within a framework. They suggested that two trainers could deliver the same content and it would be a totally different experience for the participants. Even though trainers interact and do not feel competitive, there is a reluctance to share content with one another.

It is also clear that shared frameworks, accreditation, or best practices are not something all trainers have thought about, and there is a risk of excluding people or approaches. Therefore, a first step towards any framework would be to support interaction between trainers.

“I would love us to be a much more cohesive community. And I think actually, lots of people want to be a more cohesive community. But the people that have the opportunity to help be a more cohesive community are the people that are backed by big organizations because they get big funding, and they get lots of support to be able to do these projects. And the people who are going to be most offended by somebody doing that is somebody who’s not part of those big organizations. So, I think the really difficult part is to bring all the freelancers and smaller companies together with the bigger organizations.”

Another suggestion was that process and outcome-led frameworks were most necessary and could have the biggest impact on training (more so than sharing content or evaluation).

Comparison with the United States

Similar conversations are happening in the United States about whether to formalize or accredit training. The Science Communication Trainers Network was set up to provide a centralized resource, and launched a charter in 2019 to guide practice.

METHODS

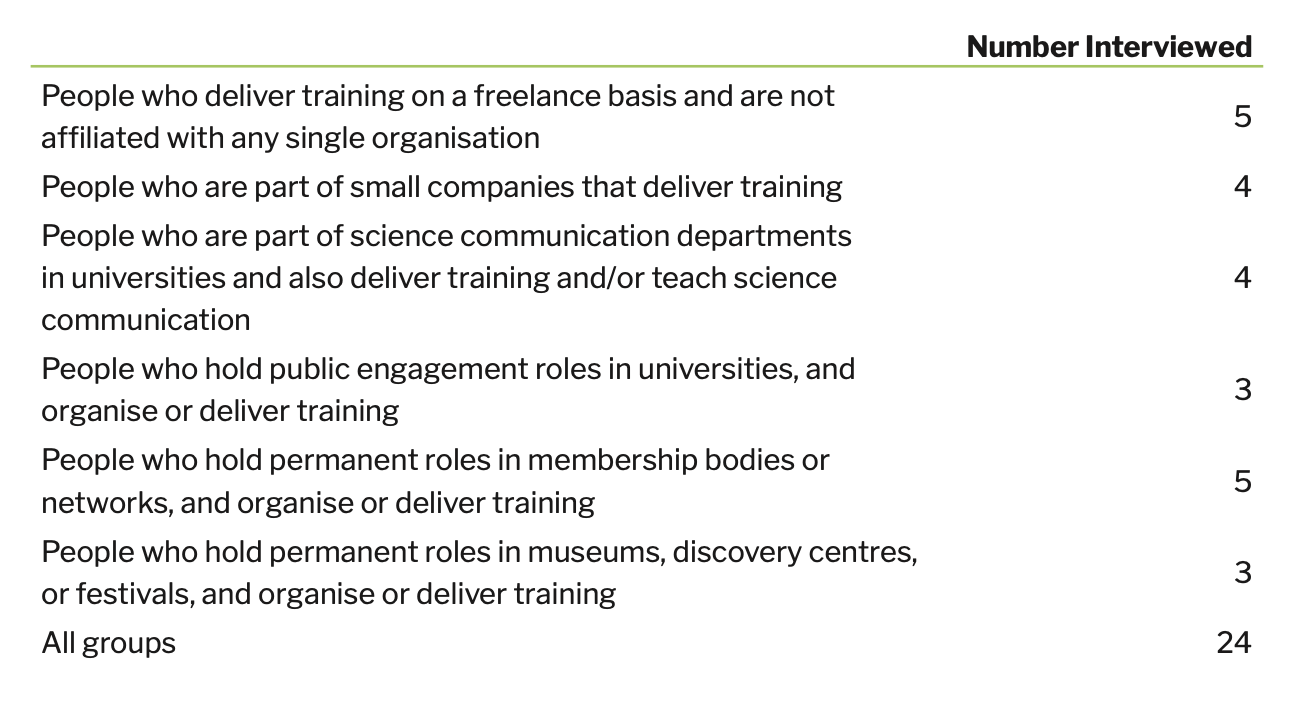

We conducted 24 interviews between November 2020 and February 2021, all using video conferencing software. Interviewees were chosen through known contacts and snowball sampling.

We contacted 43 people with an invitation to interview. Of those who did not take part in the study: 11 did not reply, 3 had moved roles and/or felt they were not suitable for the study, 2 could not find a suitable time to take part, and 1 would only take part if they received payment.

Interviewees represented the following groups in the UK science engagement training community:

Prior to the interviews, we obtained informed consent and background information through an online questionnaire. The background survey revealed that around 6 in 10 people reported spending most or all of their time planning, conducting, or evaluating training.

We asked which academic fields trainers identified with (they could select more than one). Over 5 in 10 identified with biological sciences, 3 in 10 with social sciences or policy, and 3 in 10 with physical sciences. Around 2 in 10 commented that they identify across all disciplines.

We also asked demographic questions. Around 6 in 10 identified as female, just over 8 in 10 indicated they were white, and around 1 in 10 reported they had a disability or long-term health condition. More than 7 in 10 were educated to postgraduate level in any subject.

The interviews followed a semi-structured format and attempted to address the following research questions:

- What formats and contents are typical?

- Who is getting trained?

- How is training evaluated?

- Why do trainers choose this career and what supports them in their professional development?

- How connected is the training community?

- What is the link between universities and other providers?

- How has the COVID-19 pandemic affected training?

We compared the responses with similar interviews with United States-based science communication trainers, conducted by our research team in 2014 and 2017. The findings presented in this report are based on initial thematic analysis and should be viewed as tentative. Deeper analysis will be conducted during the coming months.

APPENDIX 1: OPPORTUNITIES FOR US-UK LEARNING

Throughout this research report we have drawn comparisons with interviews with US-based science engagement trainers, conducted in 2014 and 2017.10 11 12 Although the training landscapes in the two countries have developed in different ways, notably around the influence of centralization and funding in the UK, and key organizations in the US, there are many useful comparisons to be drawn. In this section, we briefly summarise the similarities, common challenges, and progress towards more effective training.

Similarities

There was a lot of overlap between US and UK science engagement training, and we noted major similarities in three areas: format, participants, and trainer development. These similarities are unlikely to be unique to science engagement, but instead are indicative of general trends in training and development which the trainers in our studies have adopted. However, they still provide useful starting points for imagining opportunities for collaboration and mutual learning between US- and UK-based trainers.

Format

The dominant mode of training is a workshop-style delivery of content; although training often takes place within the participants’ home institution, it usually takes place out of the context of research or engagement environments. For this reason, training is highly practical and focuses on familiarising participants with the types of science engagement they are likely to encounter, or have expressed interest in. Most training is delivered as a one-off session in mid-sized groups over a period of a few hours to a few days.

Participants

Typical participants are at early career stages, have a science background, and are interested in taking the first steps in science engagement. Both US and UK trainers note a lack of ethnic and cultural diversity in training participants.

Trainers

Trainers in the US and UK have followed similar career routes into science engagement and training. They are highly educated, but don’t usually hold formal qualifications in training, instead mostly learning training “on the job”. There is inconsistent access to development opportunities or interaction between trainers.

Common Interests and Challenges

There were also similarities in the areas of interest and challenges faced by US- and UK-based trainers. These tended to be linked with a desire for more coordinated approaches to science engagement training, and a bolder vision for what it can achieve.

Evaluation

Assessing the effectiveness of training presents an ongoing challenge, particularly building a picture of the long-term effects of training on participants’ behavior and on their likelihood of continuing to develop science engagement skills with practice. As the approaches and delivery methods of training become more advanced, the capacity and funding to evaluate are lagging behind. Trainers are interested in evaluation and are naturally reflective, but do not necessarily think of long-term evaluation as their responsibility, nor do they want a quality framework imposed on them. In the absence of a shared quality framework, our research indicates that effective long-term evaluation relies on strengthening relationships between trainers, participants, and organizers or clients, and on a better understanding of the different motivations at play.

Frameworks or accreditation

Many people and organizations involved in science engagement are interested in whether quality-based or standards-based approaches to training could improve practice. Trainers would value more structure and clarity, but also worry about “over-standardisation” and who would decide what “good” looks like. Within this there are potential tensions over approaches to science engagement training: whether it is to transmit a body of knowledge and skills about science engagement to participants, whether it is to enable participants to define and shape science engagement through practice, or whether it should attempt to do both. Our research indicates that trainers often indirectly use frameworks or quality standards to guide their approach, and want participants to learn from previous practice in science engagement. This indicates that at the very least, a quality framework could be a shared set of resources or tools that have informed training practice to date.

Approach to goals and outcomes

There are tensions between how much influence trainers, participants, and organizers or clients have on the goals and outcomes of training. Trainers are reluctant to identify the specific long-term goals for science engagement that their training works towards, or even short-term outcomes, yet they can identify motivating factors that have led them to believe training is worthwhile. It is unclear what effect this has on the participants of training, and whether a more strategic approach would be valuable. However, our research identifies an opportunity for trainers to test different ways of framing the goals and outcomes that may be possible, or to create a resource for participants to better plan towards engagement goals.

Emerging Best Practice and Initiatives

Here we briefly describe four areas in the US and the UK where trainers are experimenting with new practices or systems that could improve the field.

Connecting training professionals

The US-based Science Communication Trainers Network was set up to address a collective desire for more interaction between trainers following a series of workshops in 2017-18. It attempts to build communities of practice, begin conversations about professionalization and quality, and broaden participation in training. The Network is administered by a core group of individuals, supported by sponsorship from the Kavli Foundation and the Chan Zuckerberg Initiative. Sub-committees of the Network organise convenings between members; expand membership; collect best practice on diversity, equity, and inclusion; map the network; identify resources to share; and help trainers apply findings from science communication. Whilst the Network is in its early stages, perhaps too early to assess effectiveness, our research found broad support for a similar or parallel initiative in the UK.

Positive action on equality, diversity, and inclusion

The Reclaiming STEM workshops20 were set up to put marginalized identities and experiences at the center of science engagement training. The workshop content typically spans research and social justice, but includes more specific science engagement topics and communication methods such as policy, communication, advocacy, and education. The workshops also serve to elevate voices that are traditionally underrepresented in science engagement, and as a safe space for marginalized groups to discuss challenges or expectations they are facing. Our research indicated that a few individual trainers in the UK are beginning to take positive action to diversify training participants, and Reclaiming STEM reinforces how successful these efforts could be.

Similarly, the Inclusive Sci Comm Symposium22 and landscape report23 at the University of Rhode Island aim to bring together researchers and practitioners in science communication and engagement whose core activities are grounded in inclusion, equity, and intersectionality.

Challenging incentivization structures

Some UK universities have made efforts to reframe training, create their own incentive structures, or improve uptake. These approaches are indicative of the move to embed public engagement within academic life.

Imperial College London’s Public Engagement Academy sought to address gaps in knowledge, skills, and priorities between those setting institution-wide public engagement strategies and the experiences of public engagement on a departmental and individual level. The organizers emphasized the importance of having the time and space to understand the different perspectives on engagement across the university and the wider sector, and put relationship-building at the core of the program. The Academy gave participants an increased sense of agency and confidence in the future directions their engagement activity could take, but also highlighted the challenge of navigating the structural differences and competing incentive structures of engagement practitioners and researchers. They found that a positive first step was to take the time to build community and understanding across the university, rather than relying on increased capacity or capability to fix cultural differences. Our research supports these findings, and identifies a second “gap” in knowledge, skills, and priorities between universities and external trainers.

The University of Bath Challenge CPD project aimed to take a broader look at the design, delivery, and value of continuing professional development (CPD) and public engagement. They noted a growing demand from researchers for more advanced public engagement training, and for support that goes beyond the “push-out-of-the-door” or one-off workshop approach. They also noted that as the profile and status of public engagement grows, a lack of definition of what quality engagement looked like and how it fitted into other aspects of academic identity was an ongoing barrier for researchers. Six markers of “quality” CPD were identified, which if demonstrated consistently could improve uptake and recognition:

- Networks: building cohorts of practice

- Big picture: coherent

- Rigorous and high quality: plays to people who are short on time, apprehensive of value

- Enduring: something you take away and return to

- Change: challenges thinking and behavior

- Active, timely, and relevant.

Our research confirms that there is an appetite to move beyond a workshop model of training, especially in the context of COVID-19 where face-to-face delivery models have been disrupted.

Embedded training models

Trainers based in the UK have experimented with experiential training models which US trainers could adapt or implement. For example, Glasgow Science Festival deliver an annual program, “GSF in Action”, in which PhD students collaborate with the Festival and local community over a period of 3 months to co-design and deliver hands-on family activities. The program begins with 3 days of development, in which a community partner will discuss what their audience is and what their service users would like. Participants then use this as a basis for developing content and an event, and for handling all the project management, budgetary control, and marketing. Following on, the participants have a practice day and a delivery day as part of the Festival, plus asynchronous group work. Our research showed that this experiential approach is becoming more widely used by UK science engagement trainers, especially when they have strong relationships with universities and delivery opportunities such as Festivals, and can act as a broker or connector.

APPENDIX 2: INCLUSIVE PRACTICE AND EQUALITY ANALYSIS

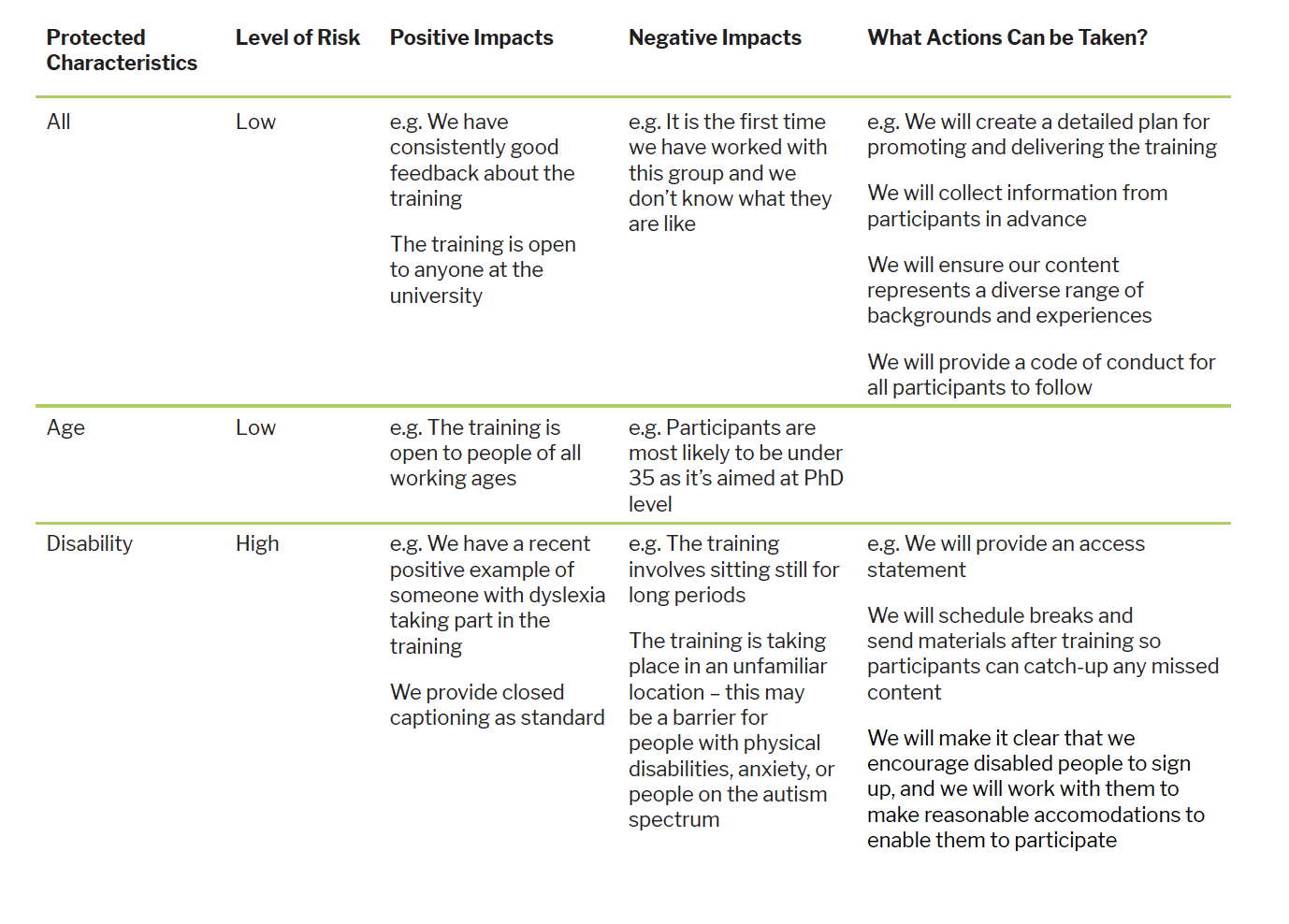

We have suggested a list of actions trainers can take to be proactively inclusive, and that can apply across all training contexts. For trainers who are usually external to the organization where training takes place, this could involve working with clients to ensure all of these steps are followed.

We suggest the following should be done before training takes place:

- Ensure your organization has an up-to-date diversity policy

- Ensure there are access statements for all training environments and types of content

- Use a tool such as Equality Analysis to identify and plan for issues that may arise for people with protected characteristics (see template)

- Collect appropriate demographic information at the point of sign-up, and benchmark it against your organization or department24

- Advertise the training to any networks or contacts that are likely to include traditionally underrepresented groups